IT environments can get complex. So it’s only natural that trying to mimic a production environment as closely as possible in a test environment (why else would you build it?) can get equally complex. You often don’t have exactly the same resources available for test and dev as you do for production, which makes it even more challenging. As a developer (and tester), you don’t want to be hindered by the shortcomings of the infrastructure (hence the popularity of consuming public cloud resources on demand). Equally, IT infrastructure folks want to deliver the best experience possible for their internal consumers, so they look for ways to introduce more flexibility into the environment.

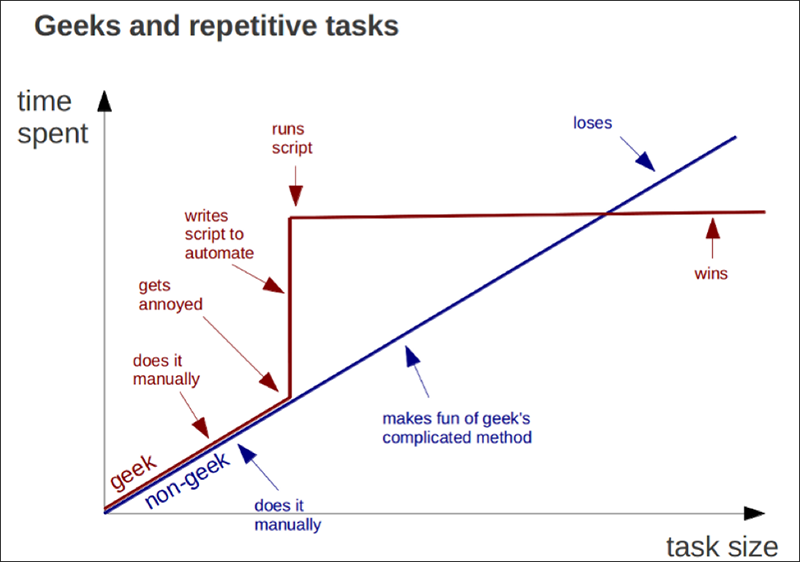

Automation is one way to get there. It can potentially speed up delivery of resources, ensure a consistent service, reduce errors, and avoid additional interruptions, increasing productivity on both sides of the equation.

So what if there were hidden potential deep inside your IT infrastructure that could help in this regard? I’m talking about backup data: data that typically sits unused in your environment, consuming space and resources until it is called upon for a restore that will (fingers crossed) return you to normal operations and keep you fully employed.

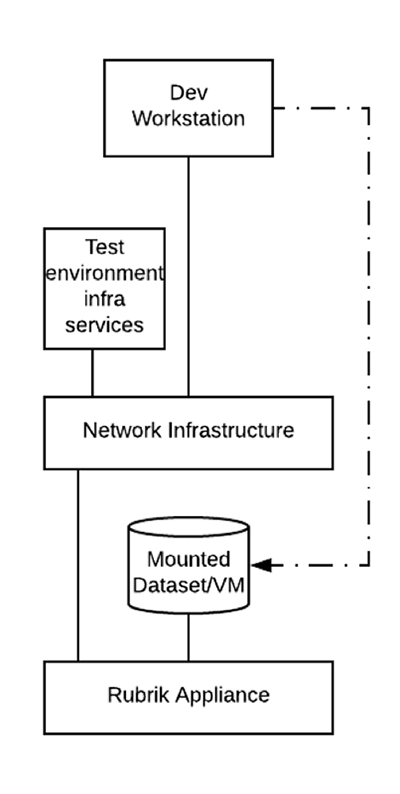

One of the unique benefits of Rubrik is the ability to instantly restore data using our Live Mount* capabilities. This can also be applied more broadly, making backup data that normally sits idle part of your test/dev workflow. Since this works with both older backup versions and the latest backup copies, you can create a test environment that uses the latest production data to work against. This gives you the best of both worlds: you are using datasets as close to production as possible, and you are hosting them on non-production resources, avoiding interference and potential performance downsides.

Once the application (as a virtual machine) is spun up and connected to the network, it can integrate with other required services in the test infrastructure (DNS, AD, etc.), and the developer or tester can now easily access the environment to perform his/her tasks.

*Live Mount allows us to use the performance and capacity tiers of the Rubrik appliances to host the actual data instead of immediately restoring it to the production storage system, where it would consume valuable resources and contend with production workloads. In the case of VMware, for example, we would expose the Rubrik appliance as an NFSv3 datastore to the ESXi host, where the backup copy of the VM would be powered up.
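Because Live Mount works with older and latest backup copies alike, a script usually has to decide which snapshot to mount first. Here is a minimal Python sketch, assuming each snapshot is a dict with an `id` and a timezone-aware `date` (a hypothetical shape, not a documented response format). With no `as_of` it picks the latest copy; otherwise it picks the newest copy taken at or before that point in time.

```python
from datetime import datetime, timezone
from typing import Optional, Sequence


def pick_snapshot(snapshots: Sequence[dict], as_of: Optional[datetime] = None) -> dict:
    """Return the newest snapshot taken at or before `as_of`.

    With `as_of=None` this is simply the latest backup copy. Each snapshot is
    assumed to be a dict with an "id" and a timezone-aware "date"
    (hypothetical shape, e.g. parsed from an API response).
    """
    candidates = [s for s in snapshots if as_of is None or s["date"] <= as_of]
    if not candidates:
        raise LookupError("no snapshot exists at or before the requested time")
    return max(candidates, key=lambda s: s["date"])


if __name__ == "__main__":
    utc = timezone.utc
    snaps = [
        {"id": "snap-a", "date": datetime(2024, 5, 1, 2, 0, tzinfo=utc)},
        {"id": "snap-b", "date": datetime(2024, 5, 3, 2, 0, tzinfo=utc)},
        {"id": "snap-c", "date": datetime(2024, 5, 2, 2, 0, tzinfo=utc)},
    ]
    print(pick_snapshot(snaps)["id"])  # snap-b (latest copy)
    print(pick_snapshot(snaps, datetime(2024, 5, 2, 12, 0, tzinfo=utc))["id"])  # snap-c
```

Raising `LookupError` when nothing qualifies makes a script fail loudly instead of silently mounting the wrong point in time.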

Managing Test/Dev Environments

Manually bringing up such an environment is already a step in the right direction, but another challenge that typically appears with test/dev environments is how to manage them, including spinning them up and tearing them down. If we can make consuming these environments and the data that resides in them easier, managing their lifecycle should ultimately become easier too. One way to achieve that is to let you integrate and automate with your existing toolchain (e.g., the tools or scripting language of your choice).

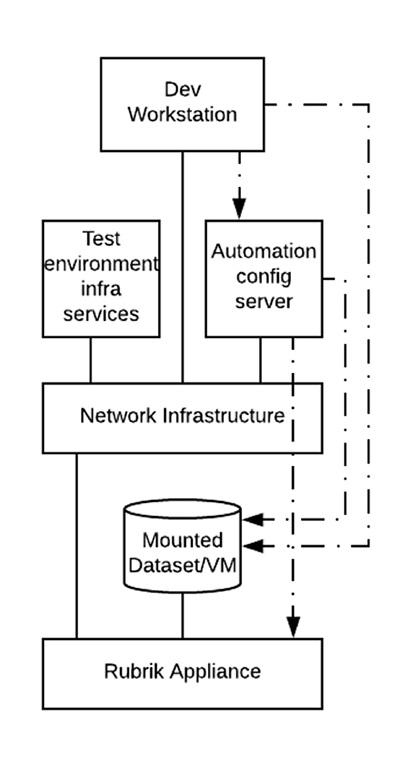

This is where our consolidated approach pays dividends. Since we control the entire stack, both the software and hardware, we can present it under one unified RESTful API and allow you to consume it in a straightforward way.

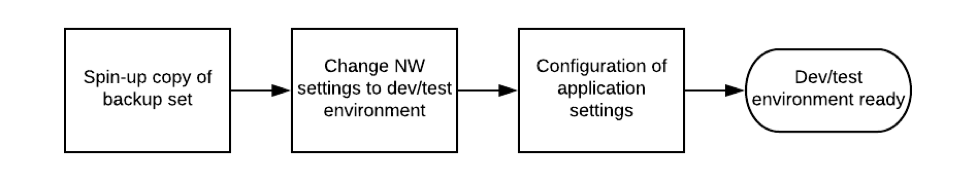
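With everything behind one REST API, spinning an environment up and tearing it down comes down to a couple of HTTP calls. The Python sketch below only *builds* the requests with the standard library’s `urllib.request.Request`, so they can be inspected without a cluster. The endpoint paths (`/api/v1/vmware/vm/snapshot/{id}/mount` and `/api/v1/vmware/vm/snapshot/mount/{id}`), the body fields (`vmName`, `powerOn`, `disableNetwork`), bearer-token authentication, and the class name `RubrikRequestBuilder` are assumptions modeled on Rubrik’s v1 VMware API. Check them against the API documentation for your cluster version before relying on them.

```python
import json
import urllib.request
from typing import Optional


class RubrikRequestBuilder:
    """Builds (but does not send) REST requests for Live Mount operations.

    ASSUMPTION: paths and body fields are modeled on Rubrik's v1 VMware API;
    verify them against the API documentation for your cluster version.
    """

    def __init__(self, cluster: str, token: str) -> None:
        self.base_url = f"https://{cluster}/api/v1"
        self.headers = {
            "Authorization": f"Bearer {token}",  # assumes API-token auth
            "Content-Type": "application/json",
            "Accept": "application/json",
        }

    def _build(self, method: str, path: str,
               body: Optional[dict] = None) -> urllib.request.Request:
        data = json.dumps(body).encode("utf-8") if body is not None else None
        return urllib.request.Request(
            self.base_url + path, data=data, headers=self.headers, method=method
        )

    def live_mount(self, snapshot_id: str, vm_name: str, power_on: bool = True,
                   disable_network: bool = True) -> urllib.request.Request:
        """Spin up: mount a backup snapshot as a new VM."""
        # Starting with networking disabled keeps the copy from clashing with
        # production until its network settings have been changed.
        body = {"vmName": vm_name, "powerOn": power_on, "disableNetwork": disable_network}
        return self._build("POST", f"/vmware/vm/snapshot/{snapshot_id}/mount", body)

    def unmount(self, mount_id: str) -> urllib.request.Request:
        """Tear down: remove a Live Mount once the environment is no longer needed."""
        return self._build("DELETE", f"/vmware/vm/snapshot/mount/{mount_id}")
```

To actually send a request, you would pass it to `urllib.request.urlopen(req)` with TLS verification configured for your appliance. A mount call is likely to return an asynchronous request status to poll rather than a finished VM; confirm the response shape in the API docs.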

When using an automated approach, the developer decides to spin up a new environment using his/her tools of choice. Assuming some sort of configuration management tool is in place, it reaches out to the Rubrik appliance, instructs Rubrik to spin up a copy of the application (or dataset), changes the network settings on the VM, registers it in the test environment, changes application variables if needed, and notifies the developer that his/her environment is ready for consumption. All of this happens without any interaction from the infrastructure team.
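The automated flow above can be sketched as an ordered list of steps that share one context. In this minimal Python sketch, `run_workflow` and every step function are hypothetical stand-ins that only record values. Real versions would call the Rubrik API, the hypervisor, DNS/AD, and the application’s own configuration, and would need error handling and cleanup.

```python
from typing import Callable, Dict, List, Tuple

Context = Dict[str, str]
Step = Callable[[Context], None]


def run_workflow(ctx: Context, steps: List[Tuple[str, Step]]) -> List[str]:
    """Run each named step in order against a shared context; return the names completed."""
    completed: List[str] = []
    for name, step in steps:
        step(ctx)  # an exception stops the workflow at the failing step
        completed.append(name)
    return completed


# Hypothetical stand-ins: real versions would call the Rubrik API, the
# hypervisor, DNS/AD, and the application's configuration.
def live_mount_copy(ctx: Context) -> None:
    ctx["vm"] = f"{ctx['app']}-test"


def change_network(ctx: Context) -> None:
    ctx["ip"] = ctx["test_ip"]  # e.g. attach to the test network and re-IP


def register_in_test_env(ctx: Context) -> None:
    ctx["fqdn"] = f"{ctx['vm']}.{ctx['test_domain']}"


def update_app_variables(ctx: Context) -> None:
    ctx["app_url"] = f"https://{ctx['fqdn']}/"


def notify_developer(ctx: Context) -> None:
    ctx["message"] = f"{ctx['vm']} is ready at {ctx['app_url']}"


STEPS: List[Tuple[str, Step]] = [
    ("live_mount_copy", live_mount_copy),
    ("change_network", change_network),
    ("register_in_test_env", register_in_test_env),
    ("update_app_variables", update_app_variables),
    ("notify_developer", notify_developer),
]
```

Calling `run_workflow({"app": "crm", "test_ip": "10.20.0.15", "test_domain": "test.example.com"}, STEPS)` runs the five steps in order. Keeping each step as a separate function makes it easy to swap one out, for example a different DNS provider, without touching the rest of the pipeline.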

Infrastructure As Code

This process can lead us down the path of infrastructure as code, an approach to infrastructure automation based on practices borrowed from software development. It emphasizes consistent, repeatable processes for provisioning and managing infrastructure and its configuration. Changes are typically made in configuration files (in YAML, for example) and then rolled out to systems, either directly or via a configuration management server, through unattended processes that include thorough validation. The premise is that modern tooling (Chef, Puppet, Ansible, Terraform, Packer, etc.) can treat infrastructure as if it were software and data. This lets people use software development methods such as versioning, automated testing, and deployment orchestration to manage infrastructure. It also opens the door to development practices such as test-driven development (TDD), continuous integration (CI), and continuous delivery (CD).
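A core idea behind tools like Terraform is to compare declared state with actual state and act only on the difference. The minimal Python sketch below applies that plan/apply loop to test/dev copies. The file format, the `environments`/`name`/`source_vm` keys, and the `plan`/`apply_plan` functions are all hypothetical. A plain dict stands in for the parsed YAML (for example, what PyYAML’s `yaml.safe_load` would return) to keep the sketch dependency-free.

```python
from typing import Dict, List, Tuple

# Desired state, e.g. loaded from a (hypothetical) environments.yaml:
#   environments:
#     - name: crm-test
#       source_vm: crm-prod

Action = Tuple[str, str, str]  # (operation, environment name, source VM)


def plan(desired: Dict, actual: Dict[str, str]) -> List[Action]:
    """Diff the desired environments against what is currently mounted.

    `desired` has the shape the YAML file above would load into; `actual`
    maps each existing test environment to the production VM it was cloned
    from. Unmounts are listed before mounts.
    """
    want = {env["name"]: env["source_vm"] for env in desired["environments"]}
    actions: List[Action] = []
    for name in sorted(actual):
        if want.get(name) != actual[name]:
            actions.append(("unmount", name, actual[name]))  # extra or stale
    for name in sorted(want):
        if actual.get(name) != want[name]:
            actions.append(("mount", name, want[name]))  # missing or replaced
    return actions


def apply_plan(actions: List[Action], actual: Dict[str, str]) -> Dict[str, str]:
    """Simulate applying the plan; a real version would call the backup API."""
    state = dict(actual)
    for op, name, source_vm in actions:
        if op == "unmount":
            del state[name]
        else:
            state[name] = source_vm
    return state
```

Running `plan` again against the state that `apply_plan` returns yields an empty list. That idempotence is what lets changes to the YAML file be reviewed, validated in CI, and rolled out like any other code change.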

Logical example of high-level process steps:

If you are interested in learning more about our API-powered automation capabilities, my colleague Joshua Stenhouse has a treasure trove of content on his blog, or you can check out Rubrik’s GitHub page.