File Data Generation

Part 2 in this series dealt with filesystem tree generation. In this part, we will look into the data of the files themselves and how they relate to tree generation.

Objectives:

Simulator should be able to support generating data with the following requirements:

- Should be random data, but configurable - say produce data with given compressibility, entropy, deduplication, etc.

- Just like a filesystem tree, generate the same data for the same initial seed and configurations.

- Allow for incremental changes within the files between successive generations. For example, change files by 5% by including inserts, deletes, and overwrites of new data over existing data that was generated for a previous generation.

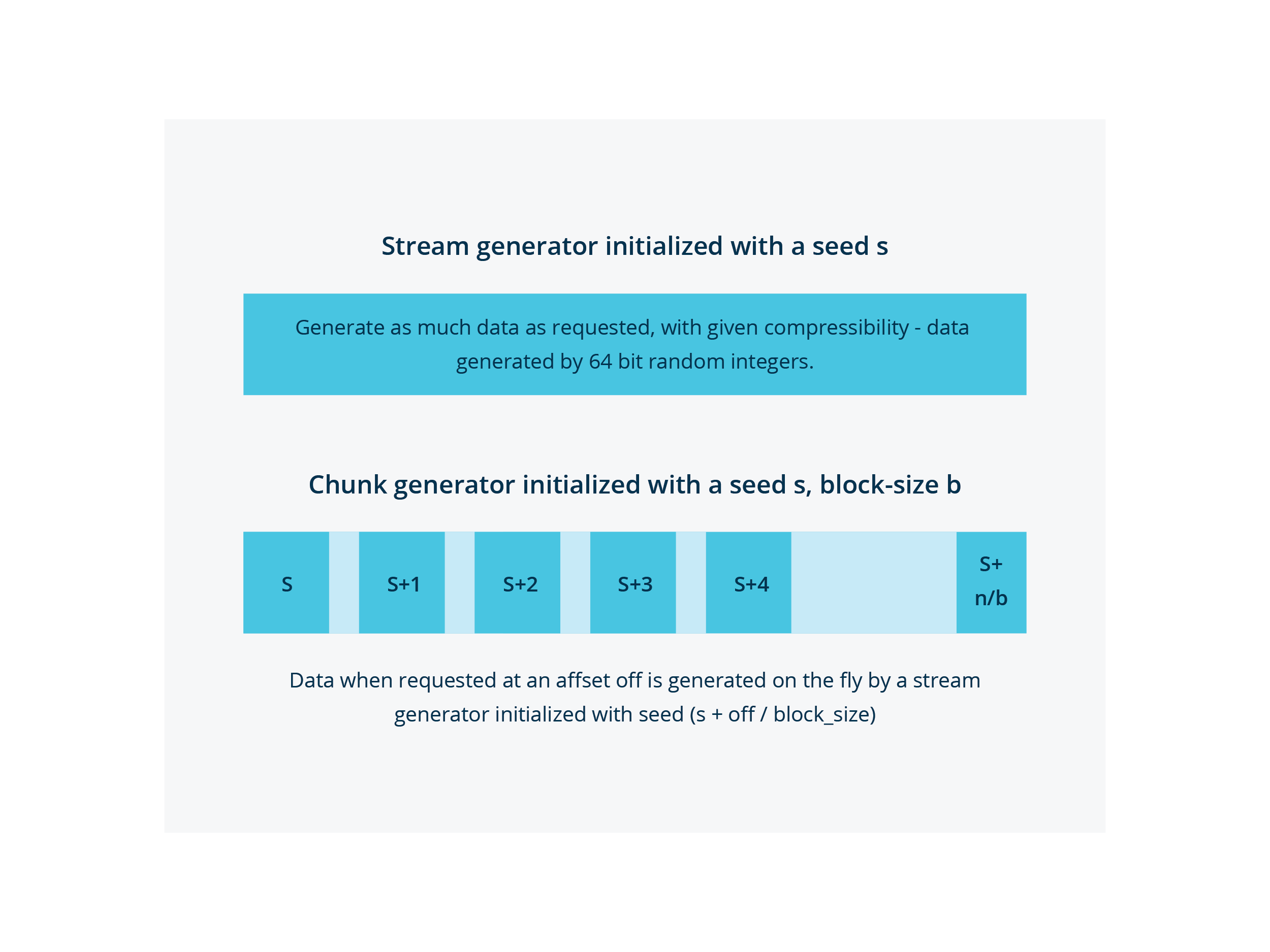

- Support for sparse files - files with holes in the data.

The basic building block of the data generation is a pseudo-random StreamGenerator which can generate data as a stream after initializing it with a seed. Building on it is ChunkGenerator which generates blocks of data each with the next incremental seed. This ensures when a file is initialized with seed, it generates the same data every time for a given offset - enabling us to do random reads on a file.

Enabling incremental changes to the file

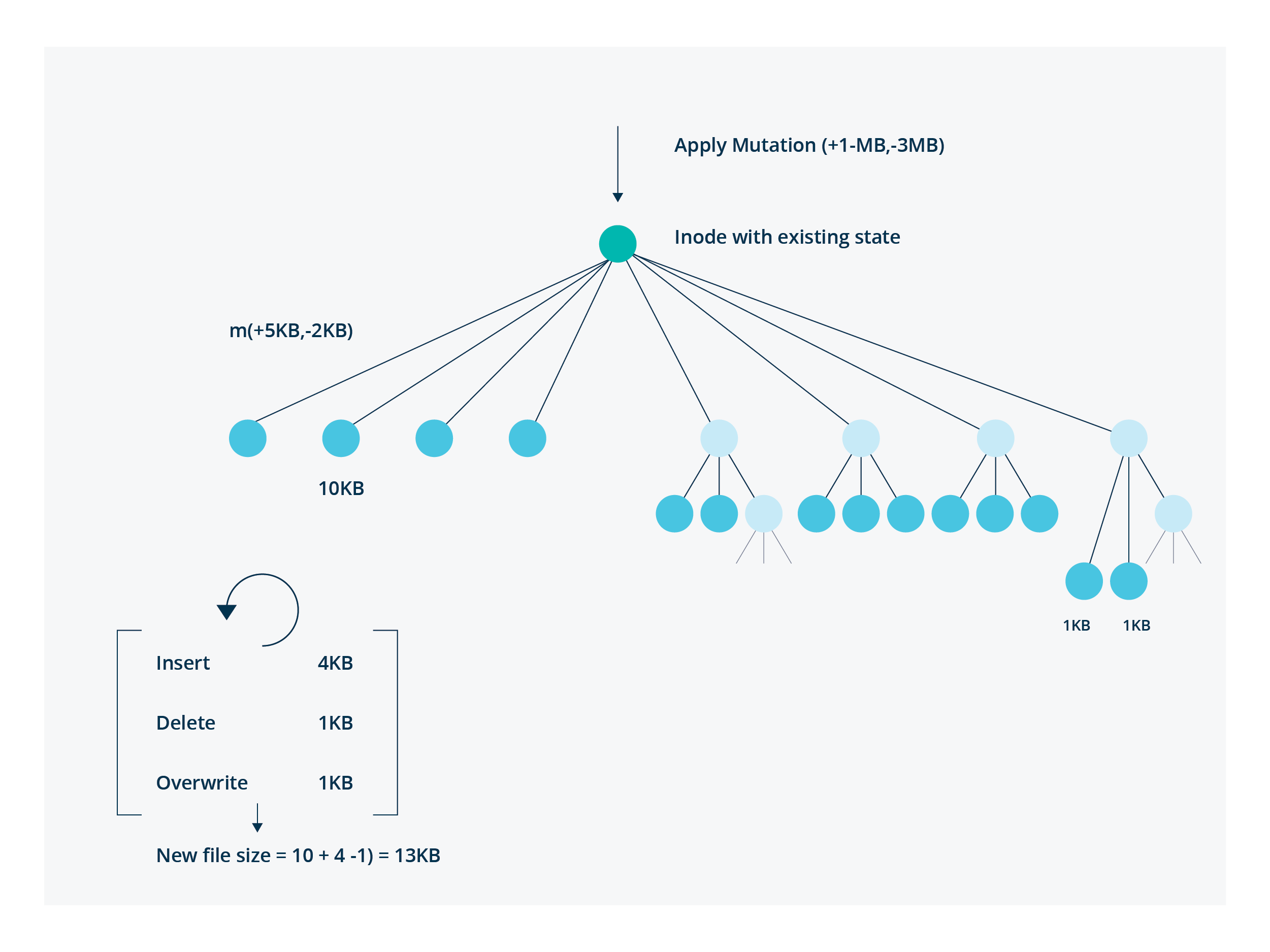

When a mutation of the form (bytesToAdd, bytesToDelete) is sent to a FileGenerator, it first changes the mutation to form (bytesToInsert, bytesToOverwrite, bytesToDelete) and then applies the following algorithm in the file generator to get data satisfying the above constraints. For example, (Add 10MB, Del 2MB) is transformed to (Insert 9MB, Overwrite 1MB, Delete 1MB) with net file size addition by 8MB.

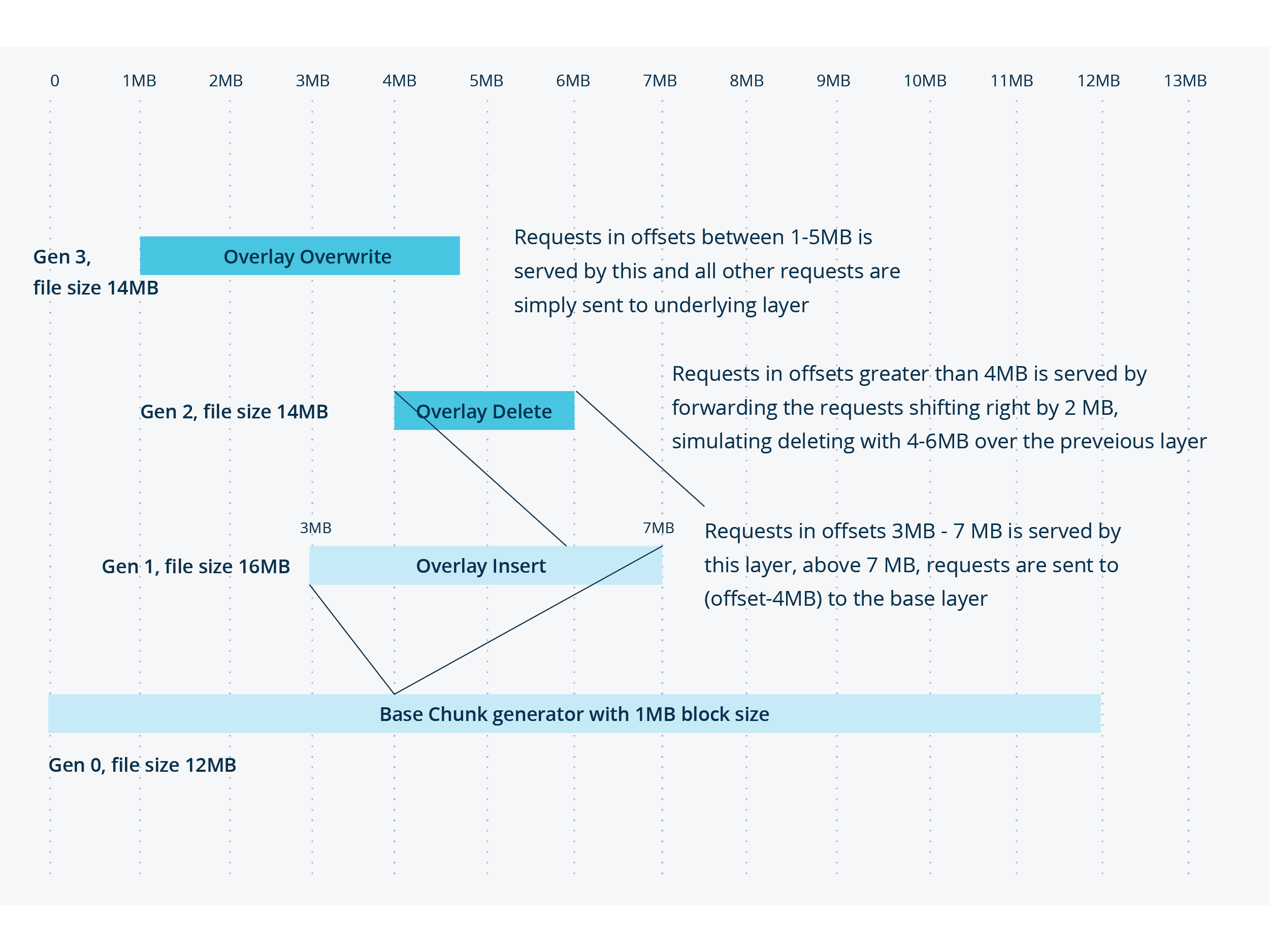

To do this, we create an OverlayChunkGenerator, which is a composition of chunk generators as basic blocks. It has exclusive ownership of a specific interval, and hands over ownership to underlying chunk generators for other intervals. This allows us to create different data for the next generation, with a mix of Insert, Overwrite and Delete simulations over the data of the previous generation for a given percentage of changes!

The above diagram shows how it can be done with a layering model, enabling inserts, deletes, and overwrites for a new generation, building over the file data of the previous generation.

Many times, we don’t want all inserts and overwrites to go to one place. For example, 1000MB overwrites if sprinkled around parts of the file will be more powerful, but the above algorithm runs O(n) for every new overwrite block over the previous generation. To be more performant in these cases, we may have multiple chunks owned by a single layer, and do binary searches within the intervals to find the offsets to which the corresponding layer can serve data.

Other challenges

There are other interesting challenges we encountered:

- There sometimes may be a need to improve performance (especially filesystem scan). To address this, we can implement caching for Inodes and periodic garbage collection to prune the nodes if the total number of nodes in the system has crossed a threshold.

- Generating hardlinks in this model is extremely difficult. Since every inode with multiple links has the attribute n_links - the number of links pointing to the same inode, so when generating an inode, we should decide all the inodes in the entire filesystem tree, that has the same inode-number. The solution involved using information based on equivalence classes to know the information on inodes beforehand. We should probably have a blog post just going into the details on how this is done for hardlinks!

Deployment

The filesystem is exposed over:

- Posix interface - the primary interface exposed. It can be used as a library when exposed over other interfaces or when unit testing.

- Thrift server - builds over POSIX and exposes as thrift server so Rubrik Cluster and other services ingest data from the server.

- FUSE - builds on POSIX to implement FUSE can be served over NFS, SMB and act as a quick data generator in Linux, also helps us to see which files are present.

Remember where we began in Part 1, where we want to have a simple YAML config file to be able to deploy the simulator, that YAML file finally turned out to look something like this:

# Yaml configuration file sent to the simulator

hosts:

- count: 10 # Simulating 10 filesystem simulators

parameters:

module: Complex # There are other modules, say for generating hardlinks

size: 10737418240 # 10GB filesystem

initial_time: 2020-01-01T00:00:00 # Starting time of all files generated

generation_period: 48h # Window of time to allow the changed time of files

hange_rate: 0.05

growth_rate: 0.01 # (change_rate - growth_rate) = delete_rate

attribute_change_rate: 0.05 # change of mode, ownership, etc.

seed_start: 12 # This and the above parameters will always generate same data

directory_fanout: 40 # Avg directories per directory

files: 1000 # Total number of files

directories: 100 # Total number of directories

symlink_probability: 0.05 # Probability of symbolic links in an inode

hole_probability: 0.10 # Probability of holes in files

Verifiability

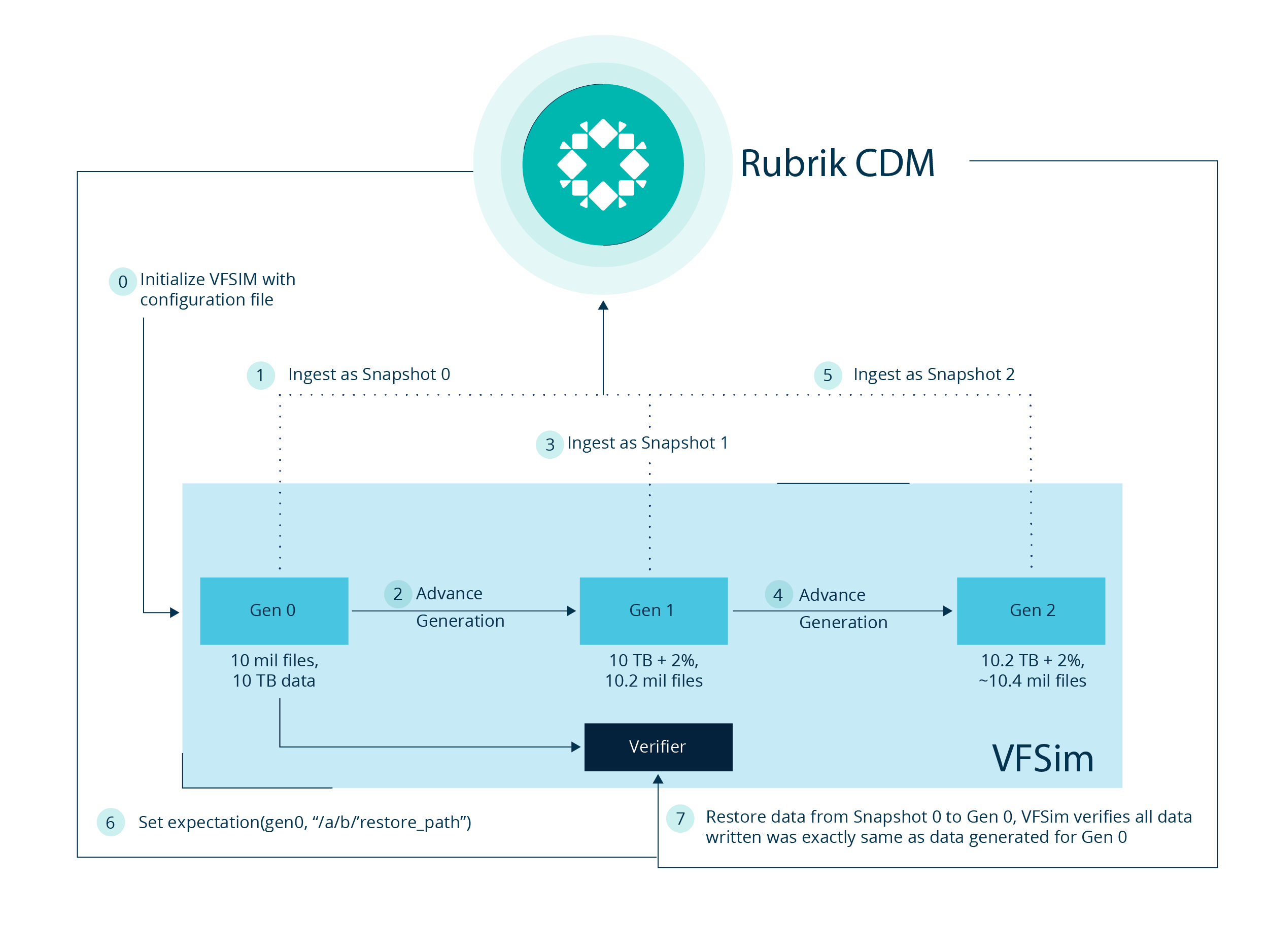

To be able to verify, we support the restoration of files and directories back to the simulator. The simulator also implements all the write APIs from POSIX, however the simulator still should know which (generation, paths) is being written. For this, we have implemented expectations over the simulator.

Each expectation is of the form (generationId, source_path, restore_path) which tells the simulator to expect writes in restore_path from the files it has created in generationId from source_path. When writes arrive, the simulator first checks if the write is within the paths set in expectations, if yes, it regenerates the data of generation set in expectation for the specific write and compares it with the data written. This gives a byte-level verifiability with the data.

The below diagram shows the first image but modified with expectations from CDM to be able to do restore on gen 0 for snapshot 0 in the simulator at “restore_path.”

And given we implement all the write interfaces - create a file, write attributes, write data, etc, Rubrik CDM can restore the data to simulator just like it can restore to a real host and while doing that, use the power of verifiability from the simulator.