Wachy is a new Linux performance debugging tool that Rubrik recently released as open source. It enables interesting new ways of understanding performance by tracing arbitrary compiled binaries and functions with no code changes. This blog post briefly outlines various performance debugging tools that we commonly use, and the advantages and disadvantages of each. Then, we discuss why and how we built wachy. We have been using wachy internally over the past few months to explore and improve the performance of our system, and we hope others find it as useful as we do in building fast software.

Standard Performance Tools

As part of the Atlas team, we are responsible for our homegrown petabyte-scale distributed file system. Almost all of the data in our Converged Data Management platform flows through us, so it’s no surprise that we care a lot about performance. The following are some of the tools in our performance debugging toolkit that we typically reach for. This list focuses on debugging our user space code itself, rather than system resources like disk utilization/link bandwidth/CPU utilization, as those are usually easier and quicker to understand.

Metrics

We have a robust metrics library and platform. This makes it easy to examine historical data, and we have metrics at several different layers to help narrow down performance issues. However, metrics are aggregated and so cannot help debug every issue, adding new metrics requires code changes, and while the overhead of metrics is fairly low, it is non-zero, so it is not feasible to have them for all functions.

Tracing

We have a homegrown tracing library that integrates with various sinks, including opentracing and raw files. Unlike metrics, this is not aggregated, allowing much more fine-grained debugging to understand what is happening at the level of each operation. The downsides are that this has a higher overhead than metrics, so we have to either sample or limit granularity, and it requires postprocessing if we want to understand aggregate behavior.

Perf and gperftools

CPU sampling profilers, combined with a flamegraph visualization, are great for finding CPU-bound operations. This has been helpful in some cases where we have accidentally introduced an expensive operation in the hot path. Unfortunately for us, most of our latency comes from blocking operations, primarily disk IO, network IO, and mutexes, which simply do not show up in a representative manner with these tools [1].

Gdb backtraces and poor man's profiler

Simply gathering a few stack traces can be amazingly effective for understanding where threads are spending their time. However, this is a rather intrusive approach: it requires pausing the program for some time, which carries some risk. Furthermore, we make very liberal use of threads in our file system and as a result, we have faced some challenges with gdb which we haven’t fully been able to track down - sometimes getting backtraces just hangs, and even when it succeeds, the samples don’t seem to be representative of the proportion of time spent [2].

These common performance tools, along with a good understanding of the system and our architecture, have enabled us to find and fix our performance issues. However, in many cases it took far longer than we would like. It was too difficult to answer what I consider a simple and fundamental question: how long is a particular function taking? This is straightforward to answer if that function is instrumented with metrics or tracing, but if not, it is almost always very time-consuming. And good luck answering it in a customer/production environment, where we can’t make any code changes (in most cases). It’s neither feasible nor desirable to instrument every possible function of interest because, as mentioned above, doing so has an associated performance impact.

Elixirs, Excalibur and eBPF

Our file system runs exclusively on Linux. A few years ago, the Linux kernel gained support for an exciting new technology called eBPF. This allows safely running user-supplied functions at pretty much arbitrary probe points in a kernel context. Much has been written about how amazing this feature is for kernel observability. But as someone who writes user space code, what I find even more amazing is the support for tracing arbitrary user space programs.

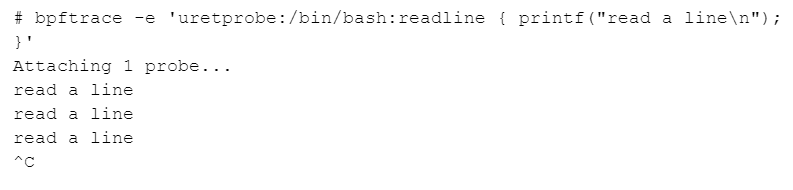

Let’s take a simple example to understand this in practice. We will use bpftrace, which is an approachable high-level language for eBPF. We’ll dive right in with an example from the Reference Guide.

This creates a user-level return probe on the binary /bin/bash when returning from the function readline. Whenever the probe is hit, we simply print that we have read a line. If you run bash in a separate terminal and hit enter, after each newline you will see that bpftrace prints “read a line.”
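The probe described above fits in a single line of bpftrace. As a minimal sketch, the Python helper below only assembles that program text for a given binary and function; the helper name `return_probe_program` is made up for illustration, while the `uretprobe:binary:function` probe form and `printf` are standard bpftrace.

```python
def return_probe_program(binary: str, function: str, message: str) -> str:
    """Build bpftrace source that prints `message` whenever `function` returns."""
    # uretprobe:<binary>:<function> fires when the function returns.
    probe = "uretprobe:" + binary + ":" + function
    # "\\n" emits a literal backslash-n so that bpftrace's printf sees the escape.
    action = 'printf("' + message + '\\n");'
    return probe + " { " + action + " }"


if __name__ == "__main__":
    # Print the program text so it can be handed to `bpftrace -e`.
    print(return_probe_program("/bin/bash", "readline", "read a line"))
```

Running the script prints `uretprobe:/bin/bash:readline { printf("read a line\n"); }`, which can be passed to `bpftrace -e` (root privileges are normally required).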

What makes this magical is that we can do this for any binary and any function (although, note that it has to be a compiled language [3]). All this, without any changes to the binary, and minimal overhead (1-2 microseconds per probe in my testing)! And of course, since no changes to the binary are required, supporting this instrumentation has zero perf impact when it is not in use.

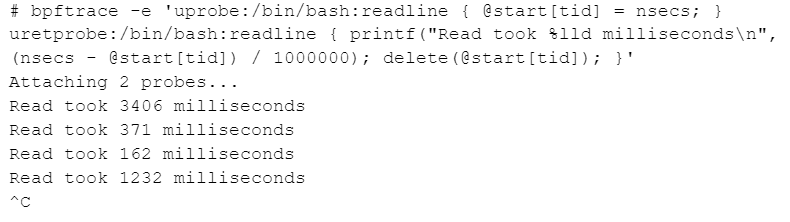

But now, we can extend this to answer the question we had earlier: how long is a function taking?

We record the start time of the readline function (in a map with thread ID as the key, to allow correctly tracking multiple threads/processes), and on return, we print the duration. You’ll see that the output matches how long bash was waiting for you to hit enter.
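The entry/return pairing described above can be sketched the same way. The hypothetical helper `latency_program` below assembles a bpftrace program that stores the entry timestamp in a map keyed by thread ID and prints the elapsed time on return. The map name `@start` and the millisecond formatting are illustrative choices, not necessarily what the original example used.

```python
def latency_program(binary: str, function: str) -> str:
    """Build bpftrace source that prints how long each call to `function` takes."""
    target = binary + ":" + function
    lines = [
        # On entry, remember when this thread started the call.
        "uprobe:" + target + " { @start[tid] = nsecs; }",
        # On return, act only if a matching entry was recorded for this thread.
        "uretprobe:" + target + " /@start[tid]/ {",
        '  printf("' + function + ' took %d ms\\n", (nsecs - @start[tid]) / 1000000);',
        # Remove the entry so the map does not grow without bound.
        "  delete(@start[tid]);",
        "}",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(latency_program("/bin/bash", "readline"))
```

The `/@start[tid]/` predicate skips returns whose entry was never observed, for example calls already in flight when tracing started.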

While eBPF and bpftrace are amazing, doing more in-depth analyses quickly gets complicated and time-consuming. For example, for a frequently executed function, we want to gather the average or a histogram over time rather than printing on every execution. And after seeing how long a particular function is taking, the natural next question to ask is, where is the time within the function going, and how long is a nested function taking? Furthermore, even things like translating function names to account for C++ symbol mangling (our file system is written in C++) add friction and not everyone is familiar with them.
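To make the aggregation idea concrete, here is a small plain-Python model (not eBPF) of summarizing per-call durations as an average plus a power-of-two histogram, similar in spirit to what bpftrace's built-in `avg()` and `hist()` map functions provide. The function names and sample durations are invented for illustration.

```python
from collections import Counter


def log2_bucket(value_ns: int) -> int:
    """Return the lower bound of the power-of-two bucket containing value_ns."""
    if value_ns <= 0:
        return 0
    return 1 << (value_ns.bit_length() - 1)


def summarize(durations_ns):
    """Return (average, histogram) where histogram maps bucket lower bound -> count."""
    histogram = Counter(log2_bucket(d) for d in durations_ns)
    average = sum(durations_ns) / len(durations_ns) if durations_ns else 0.0
    return average, dict(sorted(histogram.items()))


if __name__ == "__main__":
    # Four made-up call durations in nanoseconds; one is a slow outlier.
    average, histogram = summarize([900, 1100, 1500, 70000])
    print("average ns:", average)
    for lower_bound, count in histogram.items():
        print(f"[{lower_bound}, {lower_bound * 2}): {count}")
```

The outlier dominates the average but stays visible as its own bucket in the histogram, which is why a distribution is often more useful than a single number for a frequently executed function.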

A Wachy Way

This got me thinking: IDE-based debuggers like Eclipse or Visual Studio Code have made debugging quicker and more approachable for many developers, as compared to something like gdb. If we want to build a similarly accessible performance debugging tool based on eBPF, what would that look like? I named the tool wachy, a portmanteau of watch and tachymeter.

I had a clear set of personal requirements for wachy:

Show information in the context of the source code view. I know what I want to trace in my source code; the tool should be able to translate that into lower-level details.

Enable drill-down analysis. I can start with a particular function, and want to see within that function how long different lines are taking. I should be able to trace nested functions, potentially multiple levels deep.

Work in the terminal, i.e. be a TUI. We often have to debug remote machines where we just have SSH access. No additional port forwarding should be required.

At first glance, these requirements seem impractical: if I want to trace a particular line in the source code, it may not have a well-defined mapping to real optimized assembly code. The compiler can reorder and interleave the code such that a particular source line maps to many disconnected instructions in the assembly, which would be impractical (due to both implementation complexity and high cumulative tracing overhead) to trace accurately. While that is true in the general case, a key observation/design choice is that most things that need to be traced are encapsulated within a function [4]. So within a function, if we want to trace how long different function calls are taking, there is a clear mapping for what to trace in the assembly: a call instruction (assuming the function isn’t inlined).
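As a rough illustration of that mapping (this post does not describe how wachy itself locates call sites), the Python sketch below scans a disassembly listing in the style of `objdump -d` output and extracts direct call instructions with their callee symbols. The sample listing, addresses, and function name `find_direct_calls` are all invented for the example.

```python
import re

CALL_RE = re.compile(
    r"^\s*(?P<addr>[0-9a-f]+):\s+"   # instruction address
    r"(?:[0-9a-f]{2}\s+)+"           # raw instruction bytes
    r"callq?\s+[0-9a-f]+\s+"         # direct call and its target address
    r"<(?P<symbol>[^>]+)>"           # symbol name of the callee
)

# Hypothetical listing; _Z3foov is the mangled C++ name of foo().
SAMPLE_DISASSEMBLY = """
0000000000401136 <main>:
  401136:  55                      push   %rbp
  401137:  48 89 e5                mov    %rsp,%rbp
  40113a:  e8 e7 ff ff ff          call   401126 <_Z3foov>
  40113f:  ff d0                   call   *%rax
  401141:  c3                      ret
"""


def find_direct_calls(disassembly: str):
    """Return (address, callee symbol) for each direct call in the listing."""
    calls = []
    for line in disassembly.splitlines():
        match = CALL_RE.match(line)
        if match:
            calls.append((int(match.group("addr"), 16), match.group("symbol")))
    return calls


if __name__ == "__main__":
    for address, symbol in find_direct_calls(SAMPLE_DISASSEMBLY):
        print(hex(address), symbol)
```

Indirect calls such as `call *%rax` are skipped on purpose, because their target is not known from the listing alone. Inlined functions never appear, which matches the caveat above.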

As for drilling down into nested functions, thanks to eBPF we can run arbitrary code on function entry or exit. This allows maintaining a per-thread state to track the level of nesting. eBPF also allows for filtering at runtime, supporting interesting use cases that can’t be done with any other tool. For example, you can either filter based on the arguments of each function, or the duration of time spent in a function - this makes it great for debugging tail latency.
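The per-thread state and runtime filtering can be modeled in a few lines of plain Python. This is a sketch of the bookkeeping only, not wachy's implementation or eBPF code; the class name `NestedTracer`, its methods, and the timestamps are hypothetical.

```python
from collections import defaultdict


class NestedTracer:
    """Track nested calls per thread and keep only calls above a duration threshold."""

    def __init__(self, min_duration_ns: int = 0):
        self.min_duration_ns = min_duration_ns
        # Thread ID -> stack of (function, start time) for calls still in progress.
        self._stacks = defaultdict(list)
        # Completed calls that passed the filter: (tid, depth, function, duration).
        self.records = []

    def on_entry(self, tid: int, function: str, now_ns: int) -> None:
        self._stacks[tid].append((function, now_ns))

    def on_return(self, tid: int, function: str, now_ns: int) -> None:
        stack = self._stacks[tid]
        if not stack or stack[-1][0] != function:
            return  # Return without a matching entry: ignore it.
        _, start_ns = stack.pop()
        depth = len(stack)  # Number of traced callers still on this thread's stack.
        duration = now_ns - start_ns
        if duration >= self.min_duration_ns:  # Runtime filter for slow calls only.
            self.records.append((tid, depth, function, duration))


if __name__ == "__main__":
    tracer = NestedTracer(min_duration_ns=1000)
    tracer.on_entry(1, "outer", 0)
    tracer.on_entry(1, "inner", 100)
    tracer.on_return(1, "inner", 2100)
    tracer.on_return(1, "outer", 2500)
    print(tracer.records)
```

Raising `min_duration_ns` keeps only the slow calls, which is the shape of filter that helps when chasing tail latency.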

Conclusion

Wachy has quickly become a core part of our performance debugging toolbox. It enables a new debugging approach: starting at a high-level function of interest, getting accurate latency numbers, and progressively drilling down further. The primary drawbacks are that it is rather fine-grained - you need to have some idea what you are looking for - and it only gathers data while running live, on a single machine. And of course, because it relies on eBPF, support is limited to Linux and compiled binaries. But the benefits are equally clear: no changes to your binary are required, there is minimal overhead (only when enabled) and it allows for powerful filtering.

Wachy has been very valuable for us, both for finding performance optimizations and (perhaps just as importantly) for understanding which parts of the code are already fast, which helps us avoid premature optimization. You can check it out at https://github.com/rubrikinc/wachy. This is just the initial release and we look forward to hearing your feedback! I hope this post also familiarizes more people with eBPF user-level tracing - I am convinced that this revolutionary technology will have a big impact on the performance debugging landscape. Wachy is just one idea; I look forward to seeing what you build!