Part 1 of this series covered the challenges Rubrik initially faced with its metadata store. Our applications had to become quite complex to work around some of these challenges. We decided it was time to evaluate and switch to a different metadata store, and started building a database evaluation framework to help our decision process.

Evaluating a distributed database

We wanted to be sure that the new metadata store we chose:

Provides the required consistency guarantees even in the presence of node failures

Has good performance when under stress / high load

Is easy to deploy and maintain

To do this, we built a consistency and load testing framework.

Consistency Tests

Our consistency tests were written to make sure that the database provides the required consistency guarantees even in the presence of a node/disk failure.

We built several application simulators to perform database operations and validate that the state of the database is what the application expects it to be. Some example application simulators we built were:

A distributed semaphore

There are semaphores defined in the database for different resource types.

Multiple processes try to acquire and release units of these resources.

There are periodic validations that there are no leaked/overcommitted resources.

Rubrik's distributed file system simulator

Multiple processes simulate creating, deleting, and updating of file and directory metadata stored in the distributed database.

Rubrik's job framework simulator

Multiple processes simulate scheduling, claiming, and running jobs with states stored in the distributed database.

Each of these simulators maintained the current expected state of the database in memory and ran periodic validations against the database. The simulators ran against a multi-node cluster and randomly chose a live database node for each query.
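The pattern of keeping the expected state in memory and validating it against the database can be sketched for the distributed semaphore case. This is a minimal single-process illustration, not Rubrik's simulator: it uses an in-memory SQLite database as a stand-in for the distributed database, and the `semaphore` table, the `SemaphoreSimulator` class, and the resource names are all hypothetical.

```python
import random
import sqlite3


class SemaphoreSimulator:
    """Keeps the expected semaphore state in memory and checks it against the database."""

    def __init__(self, conn, capacities):
        self.conn = conn
        # resource -> units this simulator expects to be held
        self.expected = dict.fromkeys(capacities, 0)
        conn.execute(
            "CREATE TABLE semaphore "
            "(resource TEXT PRIMARY KEY, capacity INTEGER, held INTEGER)"
        )
        conn.executemany(
            "INSERT INTO semaphore VALUES (?, ?, 0)", list(capacities.items())
        )
        conn.commit()

    def _update(self, sql, params):
        # True if exactly one row was changed by the conditional update.
        changed = self.conn.execute(sql, params).rowcount == 1
        self.conn.commit()
        return changed

    def acquire(self, resource, units):
        # The WHERE clause rejects the update if it would exceed capacity.
        ok = self._update(
            "UPDATE semaphore SET held = held + ? "
            "WHERE resource = ? AND held + ? <= capacity",
            (units, resource, units),
        )
        if ok:
            self.expected[resource] += units
        return ok

    def release(self, resource, units):
        ok = self._update(
            "UPDATE semaphore SET held = held - ? "
            "WHERE resource = ? AND held >= ?",
            (units, resource, units),
        )
        if ok:
            self.expected[resource] -= units
        return ok

    def validate(self):
        """Return a list of discrepancies; an empty list means consistent."""
        problems = []
        rows = self.conn.execute("SELECT resource, capacity, held FROM semaphore")
        for resource, capacity, held in rows:
            if held > capacity:
                problems.append(f"{resource} overcommitted: {held} > {capacity}")
            if held != self.expected[resource]:
                problems.append(
                    f"{resource} leaked: db={held} expected={self.expected[resource]}"
                )
        return problems


conn = sqlite3.connect(":memory:")
sim = SemaphoreSimulator(conn, {"cpu": 8, "disk": 4})
rng = random.Random(42)
for step in range(500):
    operation = rng.choice([sim.acquire, sim.release])
    operation(rng.choice(["cpu", "disk"]), rng.randint(1, 3))
    if step % 50 == 0:  # periodic validation
        assert sim.validate() == []
```

In a real test the operations would come from multiple processes and target different live nodes while failures are injected; the validation step stays the same.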

Different kinds of failures were injected into the system during the consistency tests. These failures were injected into one node at a time. Some examples of failures injected are:

A hard reboot of a node

Kill the running database

Disk corruption - Intentionally corrupt the data files used by the database

Fill disk for the database's data partition

Unplug the disk for the database's data partition

Clock jump - Jump the system time on a node forward / backward by a few hours

Clock drift - Slowly drift the system time on a node

During these failures, the application simulators continue to run pointing to live database nodes.

A certain class of failures was also injected into multiple nodes simultaneously, exceeding the fault tolerance supported by our configuration, and we verified that the database was unable to serve queries when this happened. This confirmed that the database chooses consistency over availability (CP in the CAP theorem). Our consistency tests are open-sourced here.

Load Tests

We wrote another class of tests to evaluate the performance of a distributed database under high load. The performance tests were defined in a flexible, custom-built framework. The framework supported wildcard query patterns and captured performance metrics at the end of the test. Some of the performance metrics we looked at were:

Query latencies and throughput

CPU utilization

Memory utilization

Disk stats (IOPS / bytes)

Network traffic

Our load tests could define high-contention workloads, with reads and updates to the same row. The workloads (query patterns) were defined as JSON documents (examples below).

    {
      "name": "point_select_all",
      "transaction": [{
        "query": "SELECT * FROM sqload.micro_benchmark where job_id = $1",
        "num_rows": 1
      }],
      "params": {
        "generators": [
          "int_job_id"
        ]
      },
      "concurrency": 4,
      "interval": "0s",
      "latency_thresh": "15ms"
    }

Generators for placeholders such as $1 are defined in the following form:

    "int_job_id": {
      "name": "int_uniform",
      "params": {
        "min": 1,
        "max": 1000
      }
    }

There are pre-defined generators for generating integers/strings of a particular distribution. The salt defines the order of random numbers generated to provide better query interactions. The workloads were run after an optional "populate" phase to populate initial data in the database.
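To illustrate how such a workload definition might be consumed, here is a minimal sketch that loads the two JSON fragments above and produces values for the `$1` placeholder. The function names (`parse_duration`, `make_generator`, `bind_params`) are hypothetical, and treating the salt as the seed of the random number generator is an assumption, not a description of the actual framework.

```python
import json
import random

WORKLOAD = json.loads("""
{
  "name": "point_select_all",
  "transaction": [{
    "query": "SELECT * FROM sqload.micro_benchmark where job_id = $1",
    "num_rows": 1
  }],
  "params": {"generators": ["int_job_id"]},
  "concurrency": 4,
  "interval": "0s",
  "latency_thresh": "15ms"
}
""")

GENERATORS = json.loads("""
{"int_job_id": {"name": "int_uniform", "params": {"min": 1, "max": 1000}}}
""")


def parse_duration(text):
    """Convert strings such as "15ms" or "0s" to seconds."""
    # "ms" must be checked before "s" because both end in "s".
    for suffix, units_per_second in (("ms", 1000.0), ("s", 1.0)):
        if text.endswith(suffix):
            return float(text[: -len(suffix)]) / units_per_second
    raise ValueError(f"unsupported duration: {text!r}")


def make_generator(spec, salt=0):
    """Build a zero-argument function that yields values for one placeholder."""
    if spec["name"] != "int_uniform":
        raise ValueError(f"unknown generator: {spec['name']!r}")
    rng = random.Random(salt)  # assumption: the salt seeds the sequence
    low, high = spec["params"]["min"], spec["params"]["max"]
    return lambda: rng.randint(low, high)


def bind_params(workload, generators, salt=0):
    """Return a function producing one list of values ($1, $2, ...) per call."""
    gens = [
        make_generator(generators[name], salt)
        for name in workload["params"]["generators"]
    ]
    return lambda: [gen() for gen in gens]


next_params = bind_params(WORKLOAD, GENERATORS, salt=7)
threshold_seconds = parse_duration(WORKLOAD["latency_thresh"])
```

Each call to `next_params()` returns a one-element list that a runner could bind to the query, comparing the measured latency against `threshold_seconds`.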

When we found performance issues with our applications on a database, we used this framework to create a minimalistic reproduction of the problem based on the query pattern of the application. Once we had a reproduction of the issue, we were able to dig deeper into the root cause analysis of those problems.

Conclusion

After building our consistency and load test framework, we began our evaluation with CockroachDB. It passed all of our consistency and load tests, so we did not need to test any other databases at that time. Our tests detected a few issues (Example A, Example B), which Cockroach Labs promptly fixed.

CockroachDB

CockroachDB is a distributed SQL database. CockroachDB provided a lot of features we found useful:

It is ACID compliant without the use of locks, as explained in this blog. It provides CP in the CAP theorem (which is what we want at Rubrik)

It provides cross table read-modify-write transactions

It heals automatically after node crashes.

It provides performant and scalable secondary indices.

It is easy to deploy.

CockroachDB Transaction internals

CockroachDB divides contiguous rows, ordered by primary key, into ranges. Each range is replicated n ways, where n is configurable. It uses the Raft consensus protocol to keep the n replicas of a range in sync. Only a quorum (majority) of replicas is required for the Raft cluster to function. At Rubrik, we use 5-way replication so that we can tolerate the failure of 2 replicas (nodes).

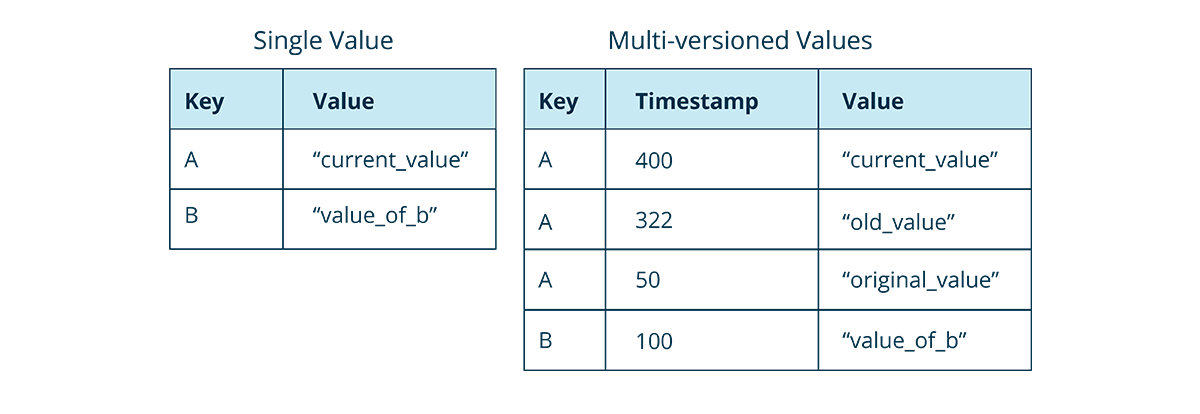
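The relationship between the replication factor and fault tolerance is simple majority arithmetic. The helper names below are illustrative only:

```python
def quorum_size(replicas):
    """Smallest number of replicas that forms a majority."""
    return replicas // 2 + 1


def tolerated_failures(replicas):
    """Replicas that can fail while a majority remains."""
    return replicas - quorum_size(replicas)


def can_serve(replicas, failed):
    """True if the surviving replicas still form a quorum."""
    return replicas - failed >= quorum_size(replicas)
```

With 5-way replication, `quorum_size(5)` is 3 and `tolerated_failures(5)` is 2, so a range keeps serving with two failed replicas and stops serving with three.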

CockroachDB uses MultiVersion Concurrency Control (MVCC) to provide serializability for concurrent transactions.

Every update to a row creates a new copy of the row with the corresponding timestamp.

Read operations on a key return the most recent version with a lower timestamp than the operation. Stale or overwritten values of a row are eventually garbage-collected once they pass an expiration period defined by a configuration setting.

MVCC helps provide serializability of transactions, that is, a global ordering of transactions. An ongoing transaction reads the value of a row as of its start time. This also means that if the expiration of an MVCC value (the configuration setting mentioned above) is x minutes, no transaction can run for longer than x minutes.
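The versioning behaviour described above can be sketched with a toy in-memory store. This is a simplified illustration, not CockroachDB's implementation: the `MVCCStore` class, integer timestamps, and the garbage-collection rule (drop a version once the version that replaced it is older than the TTL) are assumptions made for the example.

```python
class MVCCStore:
    """Toy multi-version store: every write adds a timestamped version."""

    def __init__(self, gc_ttl):
        self.gc_ttl = gc_ttl
        self.versions = {}  # key -> list of (timestamp, value), sorted by timestamp

    def write(self, key, value, ts):
        history = self.versions.setdefault(key, [])
        history.append((ts, value))
        history.sort(key=lambda version: version[0])

    def read(self, key, ts):
        """Return the most recent value written strictly before ts, or None."""
        older = [value for version_ts, value in self.versions.get(key, []) if version_ts < ts]
        return older[-1] if older else None

    def gc(self, now):
        """Drop overwritten versions whose replacement is older than the TTL."""
        cutoff = now - self.gc_ttl
        for key, history in self.versions.items():
            last = len(history) - 1
            self.versions[key] = [
                version
                for i, version in enumerate(history)
                # Always keep the newest version; keep older ones only while
                # the version that superseded them is still within the TTL.
                if i == last or history[i + 1][0] > cutoff
            ]


store = MVCCStore(gc_ttl=50)
store.write("row", "v1", ts=10)
store.write("row", "v2", ts=20)
read_before_gc = store.read("row", ts=15)  # sees "v1", the state as of ts=15
store.gc(now=100)
read_after_gc = store.read("row", ts=15)   # "v1" has been collected
```

After garbage collection, a transaction that started at timestamp 15 can no longer see the value it needs, which is why a transaction cannot outlive the expiration period.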

Different ongoing transactions can conflict with each other before they commit. Here is how CockroachDB handles conflict resolution.

Migration to CockroachDB

We qualified CockroachDB using the above-mentioned consistency and load tests and found it worked well for our requirements.

Next, we wanted to switch the underlying metadata store in our clusters from Cassandra to CockroachDB. This was a challenge because we have a complex application stack written in C++, Python, Scala, Bash, and other languages, all of which use Cassandra Query Language (CQL) to query the metadata store.

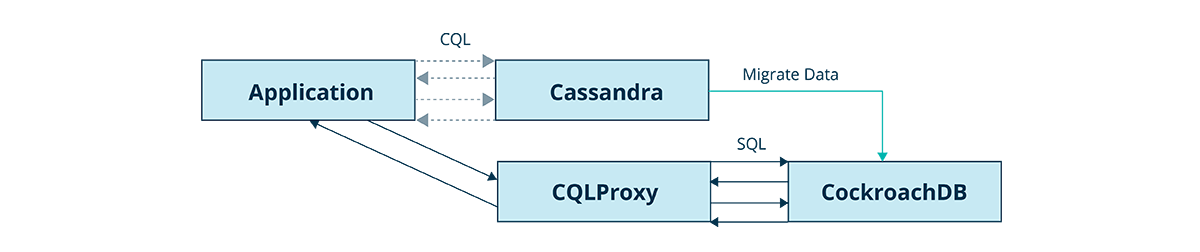

Changing all applications to use the PostgreSQL dialect of SQL (which CockroachDB uses) would have been a daunting task. Instead, we built a CQL <=> SQL translator called CQLProxy and switched our applications to querying CQLProxy (backed by CockroachDB). Migrating existing Rubrik clusters to CockroachDB therefore involved:

One time migration of existing data from Cassandra to CockroachDB

Switch applications to use CQLProxy instead of Cassandra

Newly created Rubrik clusters directly used CQLProxy / CockroachDB without the need for any migration.

We originally planned to phase out all CQL apps over time and migrate completely to SQL, but thanks to some advantages discussed later in this post, we decided to keep CQLProxy.

One time migration

All new Rubrik clusters can directly start on CQLProxy - CockroachDB. But for our existing clusters, we had to do a one-time migration of existing data from Cassandra to CockroachDB.

This happened as a part of upgrading the cluster.

The migration process involved:

For each table…

Read rows from Cassandra.

Run the row through the CQLProxy translator, where one row will result in one or more rows in SQL.

Write the translated rows to CSV files, one file per table.

Import all tables into CockroachDB.

Run a validation to ensure data in CockroachDB (accessed through CQLProxy) matches Cassandra.

Point all applications to CQLProxy instead of Cassandra.
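The export, import, and validation steps above can be sketched end to end with in-memory stand-ins. Everything here is hypothetical: `translate_row` is a placeholder for the CQLProxy translator (it moves a map-typed column into an extra table, as one way a single CQL row can become several SQL rows), the `jobs` table is invented sample data, and CSV text held in memory stands in for both the CSV files and the CockroachDB import.

```python
import csv
import io


def translate_row(table, row):
    """Hypothetical translator: yields (sql_table, sql_row) pairs for one CQL row."""
    yield table, {k: v for k, v in row.items() if not isinstance(v, dict)}
    for column, mapping in row.items():
        if isinstance(mapping, dict):
            for map_key, map_value in mapping.items():
                yield f"{table}__{column}", {
                    "id": row["id"], "map_key": map_key, "map_value": map_value,
                }


def export_to_csv(cassandra_tables):
    """Read every row, translate it, and write one CSV document per SQL table."""
    outputs = {}  # sql_table -> (buffer, writer)
    for table, rows in cassandra_tables.items():
        for row in rows:
            for sql_table, sql_row in translate_row(table, row):
                if sql_table not in outputs:
                    buffer = io.StringIO()
                    writer = csv.DictWriter(buffer, fieldnames=list(sql_row))
                    writer.writeheader()
                    outputs[sql_table] = (buffer, writer)
                outputs[sql_table][1].writerow(sql_row)
    return {name: buffer.getvalue() for name, (buffer, _) in outputs.items()}


def import_csv(csv_documents):
    """Stand-in for the database import: parse each CSV back into rows."""
    return {
        name: list(csv.DictReader(io.StringIO(text)))
        for name, text in csv_documents.items()
    }


def validate(cassandra_tables, imported):
    """Check that every translated source row is present after the import."""
    for table, rows in cassandra_tables.items():
        for row in rows:
            for sql_table, sql_row in translate_row(table, row):
                expected = {k: str(v) for k, v in sql_row.items()}
                if expected not in imported.get(sql_table, []):
                    return False
    return True


cassandra_tables = {
    "jobs": [
        {"id": 1, "state": "RUNNING", "tags": {"owner": "a", "tier": "gold"}},
        {"id": 2, "state": "DONE", "tags": {}},
    ]
}
imported = import_csv(export_to_csv(cassandra_tables))
migration_ok = validate(cassandra_tables, imported)
```

Only after validation succeeds would the applications be pointed at CQLProxy.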

CQLProxy

We built a stateless translator layer called CQLProxy. Applications talk CQL to CQLProxy, and CQLProxy translates the CQL query into one or more SQL queries in CockroachDB. It then processes the response of those queries and returns a CQL response to the application.

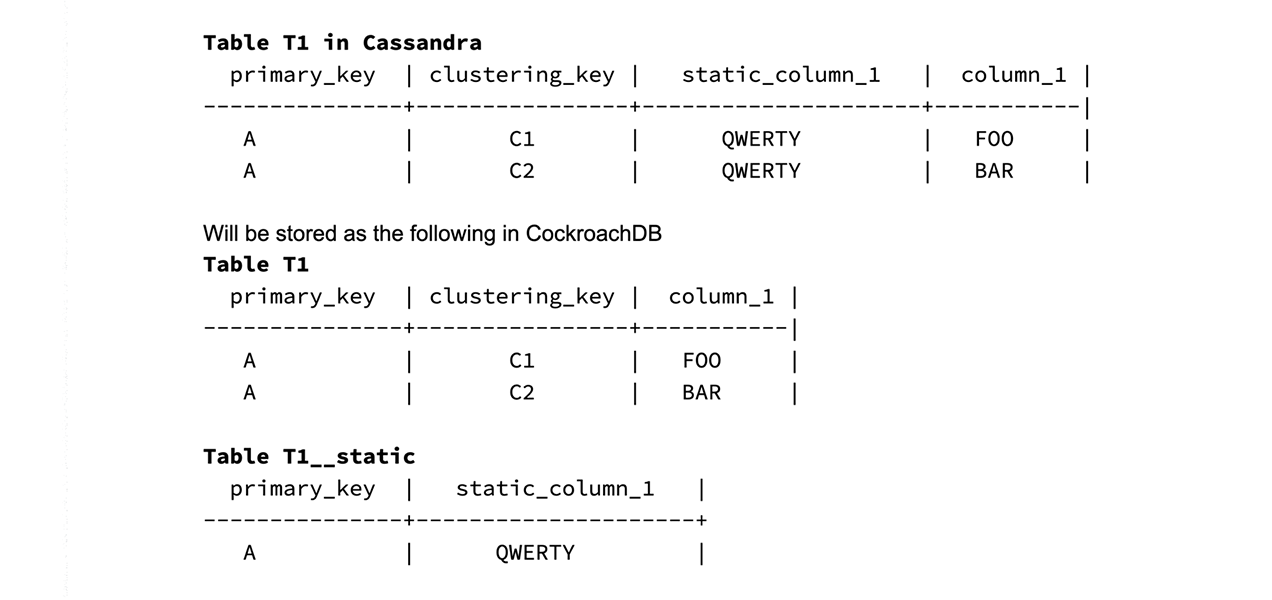

CQL schema has some extra features like static columns where a column has a single value for a partition key irrespective of the clustering key. Note that the primary key of a table is partition key + clustering key. CQL also has higher-order column types like map columns which do not exist in SQL. We implemented these features in CQLProxy by using extra tables in CockroachDB.

Below is an example of how a static column is translated by CQLProxy. If a table is represented as the following in CQL:

CQLProxy will translate the following CQL

SELECT * from T1 where primary_key = A;

to

SELECT * from T1 as A join T1__static as B on (A.primary_key = B.primary_key)

in SQL.
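The effect of the join can be tried out with a small SQLite example. The schema below is an assumption, since the original table definition is not reproduced here: `T1` holds one row per (partition key, clustering key) pair, and `T1__static` holds the single static value per partition key, so the join is on the partition key.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical layout: regular columns in T1, static columns in T1__static.
    CREATE TABLE T1 (
        partition_key TEXT,
        clustering_key INTEGER,
        value TEXT,
        PRIMARY KEY (partition_key, clustering_key)
    );
    CREATE TABLE T1__static (
        partition_key TEXT PRIMARY KEY,
        static_value TEXT
    );
    INSERT INTO T1 VALUES ('A', 1, 'x'), ('A', 2, 'y'), ('B', 1, 'z');
    INSERT INTO T1__static VALUES ('A', 'shared-by-A'), ('B', 'shared-by-B');
""")

# What a CQL "SELECT * FROM T1 WHERE partition_key = 'A'" could translate to.
rows = conn.execute(
    """
    SELECT A.partition_key, A.clustering_key, A.value, B.static_value
    FROM T1 AS A
    JOIN T1__static AS B ON A.partition_key = B.partition_key
    WHERE A.partition_key = ?
    ORDER BY A.clustering_key
    """,
    ("A",),
).fetchall()
```

Every row returned for partition `A` carries the same static value, which matches how a static column behaves in CQL.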

CQLProxy also supports richer transactions compared to what Cassandra supports. One example is that we added support for persisting rows across multiple tables in a single transaction. Cassandra only supports batch persists within a single partition key.

An additional advantage of having CQLProxy is that we can add features like "column spilling," where a single large column value in the CQL world can be stored in multiple rows in the SQL world, transparent to the client. This allowed us to scale to support large row sizes. Large rows (tens of MBs in size) caused problems in CockroachDB due to backpressure, but with CQLProxy we can split them into multiple smaller rows transparently.

An example of where we have a large row updated frequently can be a table where we store job information. A job's configuration information could be a large JSON value, and a job's progress may need to be updated frequently. Note that the update frequency problem can also be solved by moving the progress information to a separate table, but this is just an example.
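A minimal sketch of the column spilling idea follows: one large value is split into fixed-size chunks stored as separate rows and reassembled on read. The row layout `(key, chunk_index, chunk)`, the function names, and the tiny chunk size are assumptions chosen for illustration, not CQLProxy's actual storage format.

```python
CHUNK_SIZE = 4  # bytes; deliberately tiny so the example is easy to follow


def spill(key, value, chunk_size=CHUNK_SIZE):
    """Split one large value into (key, chunk_index, chunk) rows."""
    rows = [
        (key, offset // chunk_size, value[offset:offset + chunk_size])
        for offset in range(0, len(value), chunk_size)
    ]
    # An empty value still needs one row so that the key exists.
    return rows or [(key, 0, b"")]


def unspill(rows):
    """Reassemble the original value from its chunk rows, in index order."""
    return b"".join(chunk for _, _, chunk in sorted(rows, key=lambda row: row[1]))


job_config = b'{"retries": 3, "timeout": 60}'
stored_rows = spill("job-1", job_config)
restored = unspill(reversed(stored_rows))  # order of retrieval does not matter
```

The client sees only the original value; the chunk rows exist solely in the SQL layer.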

These additional features added to CQLProxy made application development over it easier.

Conclusion

CockroachDB is designed to be consistent (CP in CAP). It is designed to be easy to use and deploy and to auto-heal in the presence of failures, and it provides a SQL query interface. At Rubrik, we did our due diligence by evaluating the guarantees provided by CockroachDB with our own set of failure injection tests, which we've open-sourced here. We worked with the Cockroach Labs team on a few of the issues we found, and a big shout-out goes to them for consistently working to make the product better for us. Working closely with the Cockroach Labs team also gave us a good understanding of their open-source codebase.

By using CQLProxy, we had to make zero changes to our application layers, since the applications continue to use CQL to talk to the metadata store. Without it, we would have had to change application code from CQL to SQL and migrate the underlying distributed database at the same time, which might have been difficult to achieve in a short timeframe.

CQLProxy also has the added advantage that we can easily move to a different underlying database in the future without much effort from our side.

In the third and final part of this series, we will outline our experience with choosing the right metadata store.