The buzz around AI agents is undeniable. You can’t drive down a freeway in San Francisco without seeing a dozen billboards from the latest set of companies building new agentic tools. Agents are clearly the future and on a path to transform the enterprise. In fact, Gartner predicts that by 2028, a third of enterprise software applications will include agentic AI, and 15% of day-to-day work decisions will be made autonomously.

At Rubrik, we wanted to understand the current, real-world state of agent adoption. So we spoke with more than 100 companies, 90% of them representing large enterprises across sectors such as healthcare, finance, and retail. Our goal was to answer two questions:

1. What is the current state of agent adoption in the enterprise?

2. What challenges do enterprises face along the way?

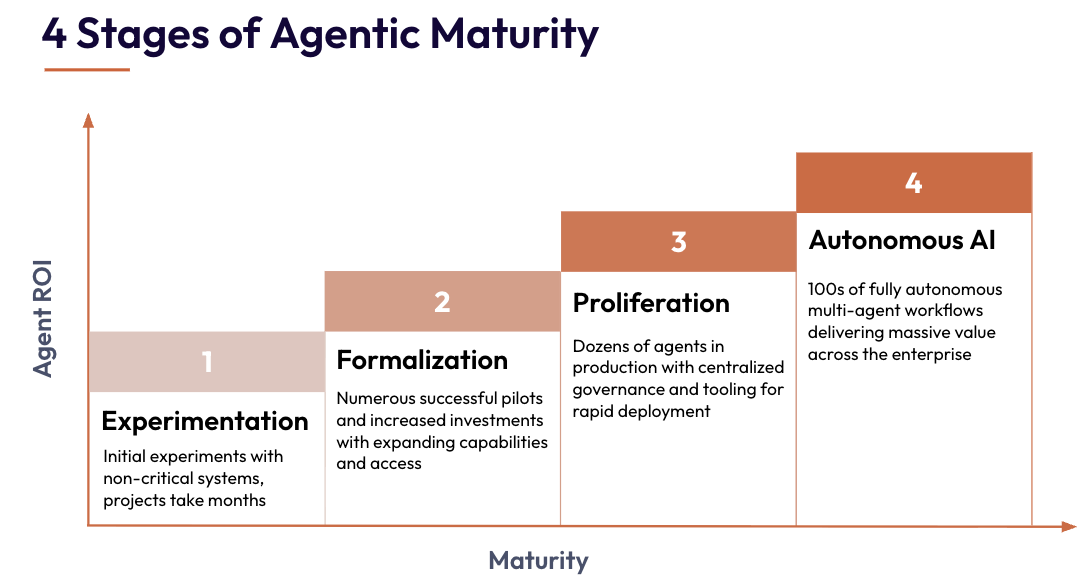

Our conversations revealed a clear pattern: the journey from a single proof-of-concept to a fleet of autonomous AI agents is a path riddled with security, data, and integration challenges. Indeed, organizations move through distinct stages of maturity, each with its own set of roadblocks and opportunities.

Navigating the difficult terrain of AI agent adoption requires guidance. So Rubrik developed a practical four-stage AI agent maturity roadmap to help you assess, plan, and accelerate your own autonomous AI journey.

Where Do Most Organizations Stand Today?

Based on our research, the majority of enterprises are in the early stages of their agentic journey. That being said, it was surprising to hear that nearly 25% had at least one agent in production.

What is the current state of agent adoption in your enterprise?

Not Yet Started: 11%

Experimentation: 51%

Formalization: 24%

Proliferation: 13%

Autonomous AI: 0%

Each stage has its own pitfalls, successes, and insights. Fortunately, we gathered excellent feedback directly from leaders on the front lines. Let’s explore.

Stage 0: Not Yet Started

While it’s clear that enterprise AI agents have arrived, not everyone is ready to fully implement them in production. But at this stage in the game, the adoption of agentic AI is no longer a competitive differentiator reserved for early adopters. Only 11% of enterprises have yet to begin experimenting with or implementing AI agents. The remaining majority have already embraced the technology to some degree.

We have to face facts: the use of AI agents is the new reality. If you are stuck at Stage 0, you should read the following stages with great interest, or you risk being left behind by your more forward-thinking peers.

Stage 1: Experimentation

This is where the agentic AI journey begins. At this stage, small teams or individual champions start exploring internal use cases. Agents are typically limited to read-only access and non-critical data to minimize risk.

What it looks like: A few isolated projects focused on low-risk productivity gains

Common Challenges:

Lack of in-house expertise in building and deploying agents

Limited access to appropriate tooling for building, managing, and monitoring agents

No defined strategy or governance around AI agent usage

Success at this Stage:

Deploying two or three proofs-of-concept (POCs) into production, which can take several months

Example quotes from teams we talked to at this stage:

"We've got plenty of ideas floating around, but it's hard to know which ones are worth turning into real agent projects."

"I don't even know what security guardrails I should put in place in the first place."

Stage 2: Formalization

After a few successful pilots, investment increases. The organization begins to formalize its approach: establishing processes, standardizing tooling, and forming governance bodies. Agents may be granted write access or connected to additional applications for the first time.

What it looks like: An official AI governance committee forms, with representatives from IT, security, and engineering. Guidelines and approval processes are put in place.

Common Challenges:

Long approval cycles from governance committees

Difficulty evaluating agent performance and calculating ROI

A lack of visibility into what tools agents are using, what data they can access, and what actions they are performing in production

Success at this Stage:

Reducing project delivery timelines from months to weeks

Securing funding for broader investment in agentic AI

Example quotes from teams we talked to at this stage:

"We have an AI governance committee with IT and security, but it’s taking a long time for each project to be approved. We try to set guidelines, but in reality, we have no visibility into what's actually going on."

"We're starting to give agents write access, but it feels like a black box. I can't tell if agents are using data appropriately or if we're exposing ourselves to risk."

Stage 3: Proliferation

At this stage, dozens of agents are in production. Standardized tooling and protocols enable developers to launch new agents more rapidly. The organization has some observability in place, logging agent actions and measuring performance.

What it looks like: A growing fleet of agents is actively used across different teams, and the focus shifts from building AI workflows to managing and securing agents at scale.

Common Challenges:

Poor visibility into exposure from external threats or sensitive data leakage

Lack of control over agent identity and authentication, often leading to over-permissioned access

No clear remediation plan if an agent is hijacked or performs destructive actions

Success at this Stage:

Deploying agents in days instead of weeks, thanks to established guardrails, observability, and remediation capabilities

Example quotes from teams we talked to at this stage:

"We're struggling to enforce least privilege access for agents. They often have way more permissions than they actually need."

"Securing AI agents is tough. They're still immature, the integrations are messy, and the API ecosystem is fragmented. The industry is moving faster than we can put protections in place."

Stage 4: Autonomous AI (The Aspirational Goal)

This is the desired end state. Hundreds or thousands of AI agents and multi-agent workflows deliver massive, quantifiable value across the entire enterprise. End-to-end business processes are automated with minimal human intervention, and a mature governance platform ensures every agent is safe, secure, and aligned with business goals.

What it looks like: The use of AI agents is deeply ingrained in the company culture, driving a significant competitive advantage.

Common Challenges:

Achieving "last mile" accuracy, which often requires fine-tuning specialized models

Managing the cumulative inference latency from multi-agent workflows in real-time use cases

Scaling cost-effectively, as token consumption in complex workflows can become prohibitive
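The latency and cost challenges above come down to simple addition: when agents run one after another, their latencies and token counts add up. Here is a minimal sketch of that arithmetic. The step names, latencies, token counts, and price per 1,000 tokens are all hypothetical values chosen for illustration, not measurements from our research.

```python
from dataclasses import dataclass


@dataclass
class AgentStep:
    name: str
    latency_s: float  # assumed average inference latency per call, in seconds
    tokens: int       # assumed tokens consumed per call


def workflow_totals(steps, price_per_1k_tokens):
    """Sum latency, tokens, and cost for steps that run sequentially."""
    total_latency = sum(step.latency_s for step in steps)
    total_tokens = sum(step.tokens for step in steps)
    cost = total_tokens / 1000 * price_per_1k_tokens
    return total_latency, total_tokens, cost


# Hypothetical four-agent workflow; every number here is illustrative.
steps = [
    AgentStep("planner", 2.0, 3000),
    AgentStep("retriever", 1.5, 8000),
    AgentStep("writer", 4.0, 6000),
    AgentStep("reviewer", 2.5, 5000),
]

latency, tokens, cost = workflow_totals(steps, price_per_1k_tokens=0.01)
print(f"{latency:.1f}s end to end, {tokens} tokens, ${cost:.2f} per run")
```

With these assumed figures, a single run takes 10 seconds and 22,000 tokens. Multiply by thousands of runs per day and it becomes clear why both numbers need to be tracked per workflow, not per agent.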

Success at this Stage:

A clear, measurable connection between agent deployment and key business metrics

Agents are a primary reason you are outperforming competitors

How to Plan Your Journey and Avoid the Landmines

Our research revealed one overarching bottleneck that holds organizations back as they mature: a lack of visibility and control.

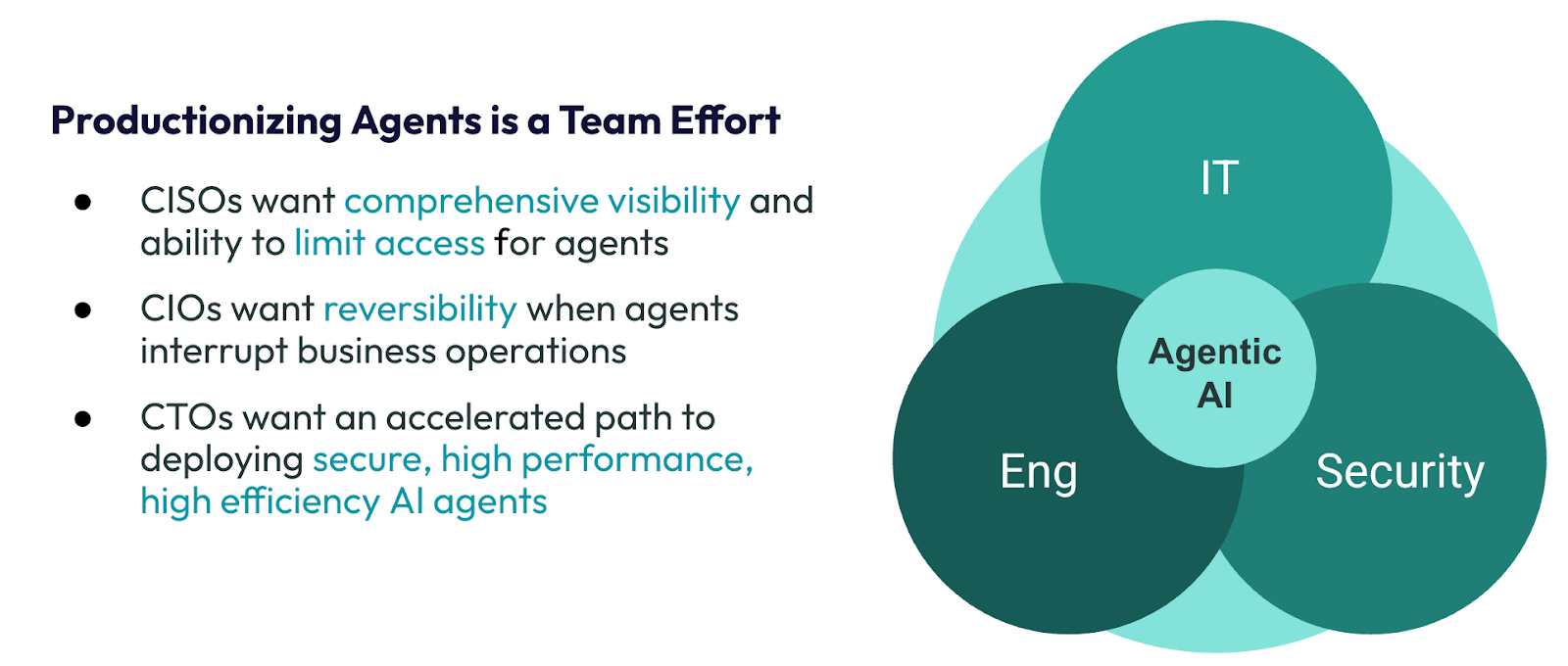

Getting agents into production is a cross-functional sport: CISOs need better visibility and control, CIOs want recoverability for production environments, and CTOs need the tooling to let their teams build AI fast and efficiently.

When agents can modify code, trigger APIs, and access sensitive data, hallucinations are no longer just an accuracy problem. They become executed actions with real-world consequences. We’ve already seen public cases of agents deleting company databases or creating phantom user roles.

So, how can you provide IT and security teams with the peace of mind to unblock developers? How can you proactively detect destructive actions, protect sensitive data, and recover if an agent goes awry? The answer lies in a new class of tooling focused on agentic governance. As you plan your path to autonomous AI, look for solutions that provide four critical capabilities:

Agent Discovery: A unified registry for all your agents, whether custom-built or from third-party platforms. You need to see the identities they use, the tools they can access, and the applications they interact with. For example, Rubrik Agent Cloud automatically discovers all your agents and creates a visual map of all agent actions for continuous monitoring and historical auditing.

Risk Monitoring: The ability to understand the "blast radius" of each agent. This means proactively identifying over-permissioned access, detecting sensitive data exposure, and flagging risky agent behaviors before they cause harm.
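One simple way to think about over-permissioned access is to compare what each agent has been granted with what it has actually used. The sketch below illustrates that idea only. The agent names, permission strings, and the `flag_over_permissioned` helper are hypothetical, and this is not a description of how any particular product performs the check.

```python
def unused_permissions(granted, used):
    """Return permissions an agent holds but has not exercised."""
    return set(granted) - set(used)


def flag_over_permissioned(granted_by_agent, used_by_agent):
    """Map agent name to its sorted unused permissions, skipping clean agents."""
    report = {}
    for name, granted in granted_by_agent.items():
        extra = unused_permissions(granted, used_by_agent.get(name, set()))
        if extra:
            report[name] = sorted(extra)
    return report


# Hypothetical inventory: what each agent may do vs. what logs show it did.
granted = {
    "invoice-bot": {"crm:read", "billing:read", "billing:write", "db:delete"},
    "faq-bot": {"kb:read"},
}
observed = {
    "invoice-bot": {"crm:read", "billing:read"},
    "faq-bot": {"kb:read"},
}

report = flag_over_permissioned(granted, observed)
print(report)  # {'invoice-bot': ['billing:write', 'db:delete']}
```

The unused permissions are a rough proxy for blast radius: they are what a hijacked or misbehaving agent could do beyond its normal job, and they are the first candidates to revoke.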

Remediation: More than just observability, you need control. This includes the ability to roll back destructive actions by restoring data, as well as to set fine-grained guardrails that block access to specific tools, applications, or identities. For example, Rubrik Agent Rewind, a feature in Rubrik Agent Cloud, provides the undo button you need when agents misbehave, with the ability to:

Trace every agent action from prompt to execution to understand precisely what happened and why

Alert when policies are violated or agents take risky actions

Rewind high-impact agent errors instantly, undoing changes to data and configurations without downtime
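To make the trace-and-rewind idea concrete, here is a minimal, generic sketch of an action log that records the previous value of everything an agent changes, so the changes can be undone back to a checkpoint. It is an illustration under simplifying assumptions (an in-memory dictionary stands in for real data and configuration), and the `ActionLog` class is hypothetical. It does not represent how Rubrik Agent Rewind is implemented.

```python
_MISSING = object()  # sentinel: the key did not exist before the write


class ActionLog:
    """Records each agent write with enough state to reverse it."""

    def __init__(self, store):
        self.store = store   # the data the agent modifies (a dict here)
        self.entries = []    # ordered trace of writes, oldest first

    def write(self, agent, prompt, key, value):
        # Capture who acted, why, and what the value was beforehand.
        self.entries.append({
            "agent": agent,
            "prompt": prompt,
            "key": key,
            "previous": self.store.get(key, _MISSING),
            "new": value,
        })
        self.store[key] = value

    def rewind(self, checkpoint):
        """Undo every logged write made after `checkpoint`, newest first."""
        while len(self.entries) > checkpoint:
            entry = self.entries.pop()
            if entry["previous"] is _MISSING:
                del self.store[entry["key"]]
            else:
                self.store[entry["key"]] = entry["previous"]


config = {"retention_days": 30}
log = ActionLog(config)
checkpoint = len(log.entries)  # remember the known-good point

# A hypothetical agent makes two risky changes.
log.write("cleanup-agent", "tidy up old settings", "retention_days", 0)
log.write("cleanup-agent", "tidy up old settings", "archive", False)

trace = [(e["agent"], e["key"], e["new"]) for e in log.entries]
log.rewind(checkpoint)
print(config)  # {'retention_days': 30}
```

The design choice worth noting is that the log stores the prior state at write time. Without that, you can see what an agent did but cannot reverse it.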

Continuous Improvement: To reach the final stage of autonomous AI, you need to unlock greater performance and accuracy. The ability to measure agent performance and manually or automatically fine-tune agents on real-world data and human feedback is key to conquering the "last mile" problem.

The journey toward autonomous AI is a marathon, not a sprint. But you can navigate the complexities of security, governance, and scale with confidence by understanding where you are on the maturity map and planning for the challenges ahead.

Learn more about how to deploy trustworthy, reliable agents into production: