At Rubrik, our products simplify protection for a variety of workloads: virtual machines, databases, physical servers, cloud, and more. Each type of workload has its own data format and its own mechanism for ingesting data, and managing each of these formats separately can be cumbersome.

In this post, we’ll discuss how Rubrik addresses this challenge with abstractions that store snapshots from all of these workloads in a unified format.

Introduction to BlobStore

BlobStore is Rubrik’s data maintenance engine. It provides an abstraction for storing and maintaining snapshot data on the Rubrik Cluster, which lets Rubrik store snapshot data uniformly regardless of the workload it comes from. Rubrik performs incremental-forever backups: the first snapshot is taken in full, and each subsequent snapshot captures only the changes since the previous one. Snapshots therefore depend on data captured by earlier snapshots, and as older snapshots are deleted and data is deduped across different workloads, it becomes hard to track which data belongs to which snapshot. BlobStore addresses this by separating how the data is stored from the snapshots that reference it. Applications can then operate on snapshots without worrying about how the underlying data for each snapshot is stored.
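To make the incremental-forever idea concrete, here is a minimal Python sketch, not Rubrik's implementation. It assumes a hypothetical model in which a disk is a dictionary mapping fixed-size block indices to block contents and blocks are never removed; `take_full` and `take_incremental` are illustrative names.

```python
from typing import Dict

# Hypothetical model: block index -> block contents for a fixed-size disk.
Disk = Dict[int, bytes]


def take_full(disk: Disk) -> Disk:
    """First snapshot: copy every block."""
    return dict(disk)


def take_incremental(previous: Disk, current: Disk) -> Disk:
    """Later snapshots: copy only blocks that are new or changed since `previous`."""
    return {i: data for i, data in current.items() if previous.get(i) != data}


day1 = {0: b"boot", 1: b"home", 2: b"logs"}
day2 = {0: b"boot", 1: b"home", 2: b"logs-v2"}
day3 = {0: b"boot", 1: b"home-v2", 2: b"logs-v2", 3: b"tmp"}

snapshots = [
    take_full(day1),
    take_incremental(day1, day2),
    take_incremental(day2, day3),
]
print([sorted(s) for s in snapshots])  # [[0, 1, 2], [2], [1, 3]]
```

Only the first snapshot pays for every block; each later one stores just what changed, which is why later snapshots cannot be read without the ones they build on.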

Let’s get familiar with the BlobStore terminology:

Blob/Blob Representation: Physical representation of the smallest unit of data (e.g. a virtual disk) in a snapshot. When we take a snapshot, the data is stored in one blob per unit of data (e.g. one blob per virtual disk).

Content: Logical representation of the data stored in blobs. A snapshot consists of one content per unit of storage in the primary workload. Content can be represented by multiple blobs.

Group: The unit of storage that is backed up at each location where a copy of the snapshot is stored. Each group stores the data for that unit of storage as a chain of blobs. For VMware workloads, we create one group per virtual disk at every location where a snapshot’s data is stored.

Chain/Blob Chain: A sequence of blobs in which the head of the sequence stores the full data while the subsequent blobs only store incremental data on top of the previous blob.
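The terminology above can be sketched as a small data model. This is a hypothetical illustration, not BlobStore's actual structures: the `Blob` and `Group` classes, their fields, and the content and blob IDs are assumptions, and each blob stores whole blocks keyed by index. Reading a content walks its chain back to the head and replays the full plus each incremental in order.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Blob:
    blob_id: str
    base: Optional[str]       # None for the head (full) blob of a chain
    blocks: Dict[int, bytes]  # all blocks for the head, changed blocks otherwise


@dataclass
class Group:
    # Hypothetically, one group per unit of storage (e.g. per virtual disk) per location.
    blobs: Dict[str, Blob] = field(default_factory=dict)
    contents: Dict[str, str] = field(default_factory=dict)  # content id -> blob id

    def add_blob(self, content_id: str, blob: Blob) -> None:
        self.blobs[blob.blob_id] = blob
        self.contents[content_id] = blob.blob_id

    def read_content(self, content_id: str) -> Dict[int, bytes]:
        # Walk from the content's blob back to the head of the chain...
        chain: List[Blob] = []
        blob_id: Optional[str] = self.contents[content_id]
        while blob_id is not None:
            blob = self.blobs[blob_id]
            chain.append(blob)
            blob_id = blob.base
        # ...then apply the full and each incremental, oldest first.
        data: Dict[int, bytes] = {}
        for blob in reversed(chain):
            data.update(blob.blocks)
        return data


group = Group()
group.add_blob("c1", Blob("b1", None, {0: b"boot", 1: b"home", 2: b"logs"}))
group.add_blob("c2", Blob("b2", "b1", {2: b"logs-v2"}))
group.add_blob("c3", Blob("b3", "b2", {1: b"home-v2", 3: b"tmp"}))
print(group.read_content("c3"))
# {0: b'boot', 1: b'home-v2', 2: b'logs-v2', 3: b'tmp'}
```

Because callers look data up by content ID, the `contents` mapping can later point a content at a different blob without callers noticing.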

BlobStore exposes a simple API that lets clients create and delete contents, and pin or unpin them. Pinning a content prevents it from being garbage collected after it has been deleted.

Once an application has created its contents, it no longer needs to worry about how they are represented. It can use a content’s identifier to perform any operation without considering how the corresponding blobs are stored in BlobStore.
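A client-side view of such an API might look like the sketch below. The `ContentStore` class and its method names are hypothetical, not Rubrik's interface; the point is that clients only handle content identifiers, that deleting a content marks it for garbage collection, and that a pin keeps a deleted content from being reclaimed until it is unpinned.

```python
from typing import Dict, Set


class ContentStore:
    """Hypothetical, simplified content API; the blob layout stays hidden."""

    def __init__(self) -> None:
        self._data: Dict[str, bytes] = {}  # content id -> data
        self._deleted: Set[str] = set()
        self._pinned: Set[str] = set()
        self._next_id = 0

    def create(self, data: bytes) -> str:
        self._next_id += 1
        content_id = f"content-{self._next_id}"
        self._data[content_id] = data
        return content_id

    def delete(self, content_id: str) -> None:
        # Deletion only marks the content; garbage collection reclaims it later.
        self._deleted.add(content_id)

    def pin(self, content_id: str) -> None:
        self._pinned.add(content_id)

    def unpin(self, content_id: str) -> None:
        self._pinned.discard(content_id)

    def read(self, content_id: str) -> bytes:
        return self._data[content_id]

    def garbage_collect(self) -> Set[str]:
        # Reclaim deleted contents unless a client has pinned them.
        victims = self._deleted - self._pinned
        for content_id in victims:
            del self._data[content_id]
        self._deleted -= victims
        return victims


store = ContentStore()
a = store.create(b"snapshot-1")
b = store.create(b"snapshot-2")
store.delete(a)
store.delete(b)
store.pin(a)
print(store.garbage_collect())  # {'content-2'}
store.unpin(a)
print(store.garbage_collect())  # {'content-1'}
```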

Internally, BlobStore runs several maintenance operations to minimize space usage and keep data access efficient.

Consolidation

Consolidation removes the blobs corresponding to deleted contents and merges the data in those blobs into the blobs that depend on them.

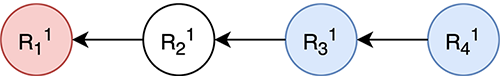

Here’s an example of consolidation with a chain of four contents, represented as R11 to R41, where Rxy denotes the xth blob representation in group y:

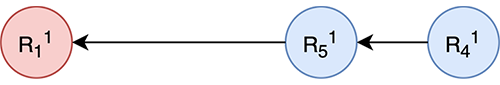

When the content represented by R21 is deleted, the R21 blob is no longer needed. But since R31 and R41 depend on R21, we can’t simply delete it. Instead, we merge the data in R21 and R31 to create R51, a new blob representation for the third content. Because R51 represents the same content that R31 did, R41 can build on R51, and R21 and R31 are no longer needed. This merging is called consolidation.
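The consolidation step in this example can be sketched as follows, assuming a hypothetical model where a chain is an ordered list of blobs and each blob stores the blocks it changed. The `consolidate` and `read` helpers are illustrative, not BlobStore code. Merging the deleted blob's changes with its child's (the child wins on conflicts) yields a blob that represents the child's content on top of the deleted blob's base, and the next blob in the chain is rebased onto it.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Blob:
    name: str
    base: Optional[str]       # blob this one builds on; None for a full
    blocks: Dict[int, bytes]  # all blocks for a full, changed blocks otherwise


def read(chain: List[Blob], name: str) -> Dict[int, bytes]:
    """Replay the chain from its head up to and including blob `name`."""
    data: Dict[int, bytes] = {}
    for blob in chain:
        data.update(blob.blocks)
        if blob.name == name:
            return data
    raise KeyError(name)


def consolidate(chain: List[Blob], deleted: str, new_name: str) -> List[Blob]:
    """Drop the blob of a deleted content, folding its changes into its child."""
    i = [b.name for b in chain].index(deleted)
    if i == len(chain) - 1:
        return chain[:i]  # nothing depends on the last blob, so just drop it
    victim, child = chain[i], chain[i + 1]
    # The child's changes win over the deleted blob's changes to the same block.
    merged = Blob(new_name, victim.base, {**victim.blocks, **child.blocks})
    rest = chain[i + 2:]
    if rest:
        # The next blob now builds on `merged`, which holds the child's content.
        rest[0] = Blob(rest[0].name, merged.name, rest[0].blocks)
    return chain[:i] + [merged] + rest


chain = [
    Blob("R11", None, {0: b"a", 1: b"b", 2: b"c"}),
    Blob("R21", "R11", {1: b"b2"}),
    Blob("R31", "R21", {2: b"c3"}),
    Blob("R41", "R31", {0: b"a4"}),
]
after = consolidate(chain, "R21", "R51")
print([(b.name, b.base) for b in after])
# [('R11', None), ('R51', 'R11'), ('R41', 'R51')]
```

Whichever blob is removed, every surviving content should read back the same data before and after consolidation; that is the invariant this step must preserve.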

Cross

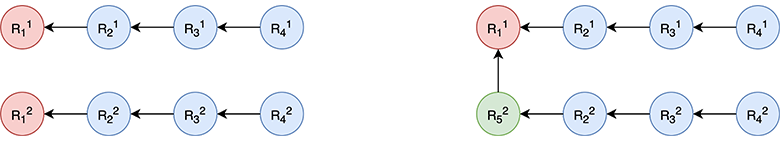

The previous section showed how snapshots of a single unit of data are deduplicated against each other within a chain. Rubrik also dedupes fulls in different chains against each other, using a strategy called cross. For each group that has a full, a process checks whether there is another full similar enough to serve as the cross base. Once such a base is found, the full is deduped against it, turning it into an incremental on top of the base.

In the example above, blob R11 was found to be a cross base for blob R12, so R12 was converted into R52, an incremental blob on top of R11.
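Crossing can be sketched the same way: the full is re-expressed as the blocks that differ from the chosen base, plus markers for blocks the base has but the full lacks. This assumes a hypothetical block-indexed model that compares blocks by position; a real dedupe engine would likely match data independent of position. The names `cross` and `apply_delta` are illustrative.

```python
from typing import Dict, Optional

Blocks = Dict[int, bytes]
Delta = Dict[int, Optional[bytes]]  # None marks a block the result must not contain


def cross(full: Blocks, base: Blocks) -> Delta:
    """Re-express `full` as an incremental on top of `base`."""
    delta: Delta = {i: data for i, data in full.items() if base.get(i) != data}
    delta.update({i: None for i in base if i not in full})
    return delta


def apply_delta(base: Blocks, delta: Delta) -> Blocks:
    """Rebuild the original full from the base plus the stored incremental."""
    data = dict(base)
    for i, block in delta.items():
        if block is None:
            data.pop(i, None)
        else:
            data[i] = block
    return data


r11 = {0: b"os", 1: b"lib", 2: b"app-a", 3: b"cache"}  # full in group 1
r12 = {0: b"os", 1: b"lib", 2: b"app-b"}               # full in group 2
r52 = cross(r12, r11)
print(r52)  # {2: b'app-b', 3: None}
```

Here the incremental stores one block instead of three, and reading it back requires the base, which is why a good cross base matters.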

To get the most effective data reduction from dedupe, it’s critical to find the best cross base: the blob most similar to the blob being crossed. At Rubrik, we have developed various algorithms that compute a similarity hash of the content based on the type of workload. This similarity hash is used to identify the base that yields the best dedupe.
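The post does not describe Rubrik’s similarity hashes, so the sketch below swaps in MinHash, a standard technique for estimating the Jaccard similarity of two sets, applied to a blob's chunks. The chunking, `NUM_HASHES`, and the function names are assumptions for illustration. The candidate base whose signature agrees with the blob's in the most positions is picked as the cross base.

```python
import hashlib
from typing import Dict, List, Sequence

NUM_HASHES = 64  # assumed signature length; longer is more accurate but costlier


def _seeded_hash(seed: int, chunk: bytes) -> int:
    digest = hashlib.sha256(seed.to_bytes(4, "little") + chunk).digest()
    return int.from_bytes(digest[:8], "little")


def minhash(chunks: Sequence[bytes]) -> List[int]:
    """For each seeded hash function, keep the minimum hash over the chunks."""
    return [min(_seeded_hash(seed, c) for c in chunks) for seed in range(NUM_HASHES)]


def similarity(a: List[int], b: List[int]) -> float:
    """Fraction of agreeing minimums: an estimate of the Jaccard similarity."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


def best_cross_base(signature: List[int], candidates: Dict[str, List[int]]) -> str:
    return max(candidates, key=lambda name: similarity(signature, candidates[name]))


r12_chunks = [b"os", b"lib", b"app-b", b"conf"]
candidates = {
    "R11": minhash([b"os", b"lib", b"app-a", b"conf"]),
    "R13": minhash([b"db", b"logs", b"index", b"wal"]),
}
print(best_cross_base(minhash(r12_chunks), candidates))  # R11
```

A signature is a fixed-size summary, so comparing a new full against many candidate bases stays cheap no matter how large the blobs are.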

Conclusion

Building the right abstractions can help simplify your architecture. Here, we saw how separating the data layer from the snapshot metadata lets us manipulate data freely under the hood, without the application layer having to worry about how the data for its snapshots is stored.
