At Rubrik, we rely on a multi-tenant architecture to store customer metadata in a large fleet of Cloud SQL database instances. With numerous production deployments globally (each supporting multiple customer accounts), maintaining high availability, performance, and robustness across this infrastructure is critical.

Managing a large fleet of Cloud SQL instances and keeping them resilient and performant has been a journey filled with valuable lessons. I’ve written this three-part blog series to share the best practices our team developed along the way with other technical practitioners and strategists. The series covers:

Part 1: Monitoring and Consistent Configuration

Part 2: Design Choices and Optimizations

Part 3: Automated Scaling, Upgrades, and Lessons Learned from Incidents (available April 2025)

Monitoring: The Foundation of High Availability

Metrics Matter

The journey to high availability starts with robust monitoring—after all, you cannot improve what you do not observe. At Rubrik, we use Google Cloud Platform’s (GCP) native Cloud SQL metrics, which offer foundational insights. But we take a multi-layered approach to monitoring that goes beyond native tools to capture the depth and detail we need for proactive management.

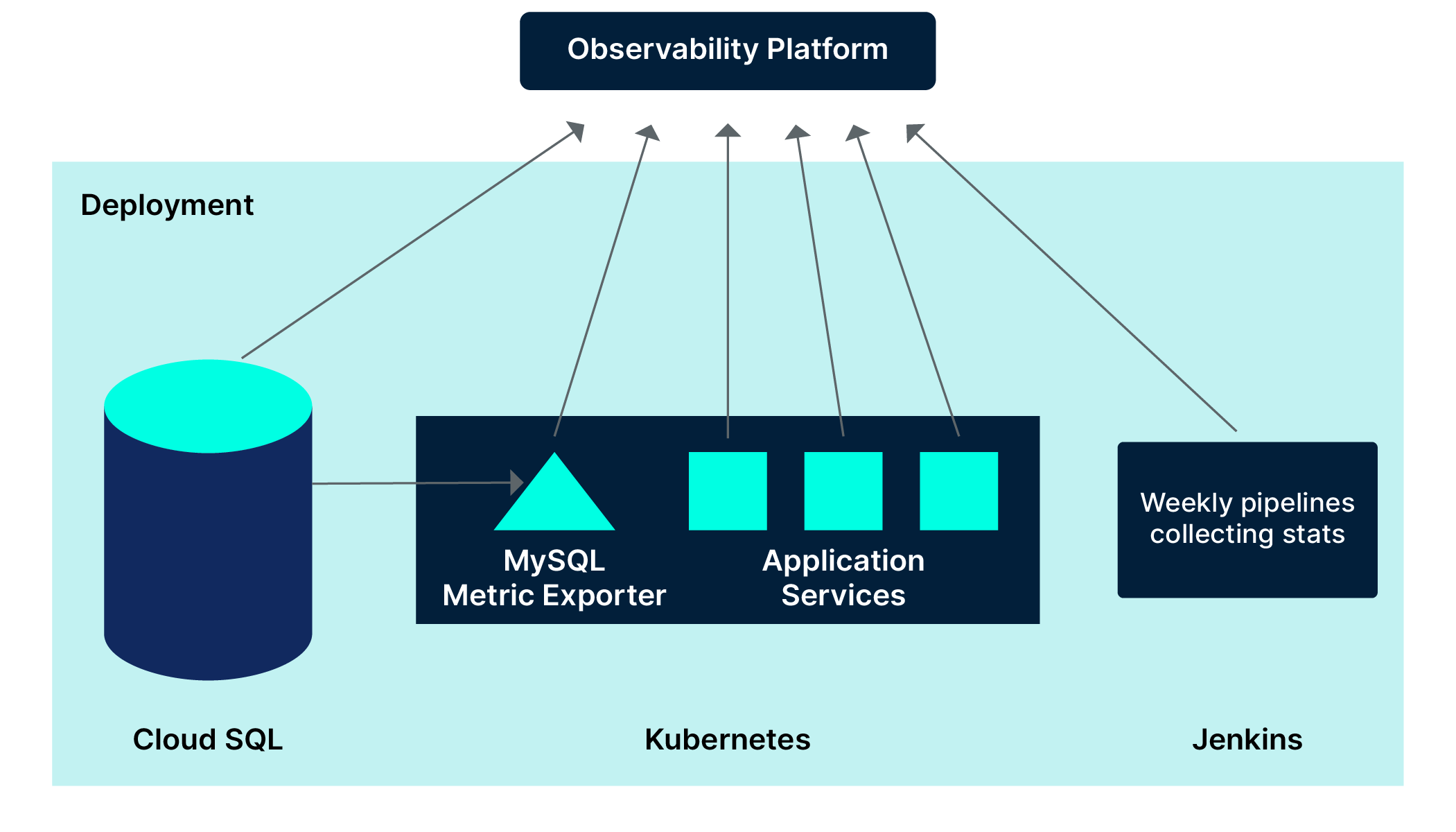

We extend these native metrics with a custom metric exporter that runs as a Kubernetes service on Google Kubernetes Engine (GKE). The exporter captures additional signals that Cloud SQL does not expose natively, filling critical visibility gaps.

To capture granular detail at the query level, we also built a custom wrapper around the SQL driver our services use, which emits detailed metrics for every query. These metrics give us actionable insight into query performance and help us identify and fix inefficient queries before they affect the performance and health of the database servers.
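The post does not say which language or driver the wrapper targets, so the following is only a minimal sketch, assuming a Python DB-API connection (the standard-library `sqlite3` stands in for a MySQL driver). `InstrumentedConnection`, `fingerprint`, and the `QueryStats` fields are hypothetical names. The wrapper normalizes each statement into a fingerprint so that queries differing only in literal values share one metric series, then records call count, errors, and latency per fingerprint.

```python
import re
import sqlite3
import time
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class QueryStats:
    count: int = 0
    errors: int = 0
    total_seconds: float = 0.0
    max_seconds: float = 0.0


def fingerprint(sql: str) -> str:
    """Replace literals with '?' and collapse whitespace so similar queries share a key."""
    sql = re.sub(r"'(?:[^']|'')*'", "?", sql)     # quoted string literals
    sql = re.sub(r"\b\d+(?:\.\d+)?\b", "?", sql)  # numeric literals
    return re.sub(r"\s+", " ", sql).strip()


class InstrumentedConnection:
    """Hypothetical wrapper that times every statement run through execute()."""

    def __init__(self, conn):
        self._conn = conn
        self.stats = defaultdict(QueryStats)  # fingerprint -> QueryStats

    def execute(self, sql, params=()):
        stats = self.stats[fingerprint(sql)]
        start = time.perf_counter()
        try:
            cur = self._conn.cursor()
            cur.execute(sql, params)
            return cur
        except Exception:
            stats.errors += 1
            raise
        finally:
            elapsed = time.perf_counter() - start
            stats.count += 1
            stats.total_seconds += elapsed
            stats.max_seconds = max(stats.max_seconds, elapsed)


# Usage: sqlite3 stands in for a MySQL driver. Literals are inlined on purpose
# to show fingerprinting; real code should pass parameters instead.
conn = InstrumentedConnection(sqlite3.connect(":memory:"))
conn.execute("CREATE TABLE t (id INTEGER, name TEXT)")
for i in range(3):
    conn.execute(f"INSERT INTO t VALUES ({i}, 'row{i}')")
rows = conn.execute("SELECT name FROM t WHERE id = ?", (1,)).fetchall()
for key, s in conn.stats.items():
    print(f"{s.count} calls, {s.total_seconds * 1000:.3f} ms total: {key}")
```

Keying metrics by fingerprint rather than raw SQL text keeps metric cardinality bounded, which matters once the per-query series are shipped to a monitoring backend.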

For even deeper observability, we’ve enabled the MySQL Performance Schema across our fleet, which gives us fine-grained detail on memory usage internals, per-database load, and lock contention. The metric exporter reads from this schema, so we can monitor and optimize both at a high level and in depth.
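As one illustration of what an exporter can derive from the Performance Schema, here is a sketch of per-database load, assuming MySQL's `performance_schema.events_statements_summary_by_digest` table, whose `COUNT_STAR` and `SUM_TIMER_WAIT` columns are cumulative (the timer is in picoseconds). The function names and the choice of "statement-seconds per second" as the load measure are assumptions for illustration; the post does not describe the exporter's internals. Because the counters are cumulative, the sketch compares two snapshots taken a known interval apart.

```python
from typing import Dict, Iterable, Tuple

# Cumulative per-schema statement counters from the Performance Schema.
PER_SCHEMA_SQL = """
SELECT SCHEMA_NAME, SUM(COUNT_STAR) AS statements, SUM(SUM_TIMER_WAIT) AS timer_wait_ps
FROM performance_schema.events_statements_summary_by_digest
WHERE SCHEMA_NAME IS NOT NULL
GROUP BY SCHEMA_NAME
"""

PS_PER_SECOND = 1_000_000_000_000  # Performance Schema timers are in picoseconds

Snapshot = Dict[str, Tuple[int, int]]  # schema -> (statements, timer_wait_ps)


def to_snapshot(rows: Iterable[Tuple[str, int, int]]) -> Snapshot:
    """Build a snapshot from PER_SCHEMA_SQL rows (SUM() may come back as Decimal)."""
    return {schema: (int(stmts), int(wait)) for schema, stmts, wait in rows}


def per_schema_load(prev: Snapshot, curr: Snapshot, interval_s: float) -> Dict[str, Dict[str, float]]:
    """Convert two cumulative snapshots into per-database rates.

    'load' is statement execution time per wall-clock second, roughly the
    average number of statements running concurrently in that schema.
    """
    out = {}
    for schema, (stmts, wait_ps) in curr.items():
        prev_stmts, prev_wait = prev.get(schema, (0, 0))
        d_stmts, d_wait = stmts - prev_stmts, wait_ps - prev_wait
        if d_stmts < 0 or d_wait < 0:  # counters were reset; skip this interval
            continue
        out[schema] = {
            "qps": d_stmts / interval_s,
            "load": d_wait / PS_PER_SECOND / interval_s,
        }
    return out


# Usage with made-up counter values taken 60 seconds apart.
prev = to_snapshot([("tenant_a", 1_000, 2 * PS_PER_SECOND), ("tenant_b", 50, 1 * PS_PER_SECOND)])
curr = to_snapshot([("tenant_a", 7_000, 32 * PS_PER_SECOND), ("tenant_b", 80, 4 * PS_PER_SECOND)])
load = per_schema_load(prev, curr, interval_s=60)
for schema, rates in sorted(load.items()):
    print(f"{schema}: {rates['qps']:.1f} qps, load {rates['load']:.2f}")
```

The counters can be reset (for example, by truncating the summary table), so the sketch skips an interval whenever a counter goes backwards instead of reporting a negative rate.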

Additionally, we run weekly pipelines that aggregate statistics on table size growth across the entire fleet, helping us keep an eye on data bloat, potential indexing issues, and other space-related concerns. Together, these data streams provide us with a 360-degree view of our production database instances, laying the foundation for the stability, performance, and availability that our customers expect.
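A weekly growth report can be as simple as diffing two size snapshots. The sketch below assumes each snapshot comes from `information_schema.TABLES`, where `DATA_LENGTH + INDEX_LENGTH` approximates a table's size (InnoDB reports these as estimates), and is keyed by a hypothetical `(instance, schema, table)` tuple. The `top_growth` function and the snapshot format are illustrative assumptions, not the actual pipeline.

```python
import heapq
from typing import Dict, List, Tuple

# Approximate per-table size; InnoDB reports these columns as estimates.
TABLE_SIZE_SQL = """
SELECT TABLE_SCHEMA, TABLE_NAME, DATA_LENGTH + INDEX_LENGTH AS size_bytes
FROM information_schema.TABLES
WHERE TABLE_SCHEMA NOT IN ('mysql', 'sys', 'information_schema', 'performance_schema')
"""

TableKey = Tuple[str, str, str]  # hypothetical key: (instance, schema, table)
GiB = 1024 ** 3


def top_growth(last_week: Dict[TableKey, int], this_week: Dict[TableKey, int],
               n: int = 10) -> List[Tuple[int, TableKey, int, int]]:
    """Return the n tables with the largest absolute growth as (delta, key, before, after).

    Tables that are new this week count as growing from zero.
    """
    growth = [(size - last_week.get(key, 0), key, last_week.get(key, 0), size)
              for key, size in this_week.items()]
    return heapq.nlargest(n, growth)


# Usage with made-up weekly snapshots.
last = {("db-1", "tenant_a", "events"): 10 * GiB, ("db-1", "tenant_b", "jobs"): 2 * GiB}
this = {("db-1", "tenant_a", "events"): 25 * GiB, ("db-1", "tenant_b", "jobs"): 2 * GiB,
        ("db-2", "tenant_c", "audit"): 1 * GiB}
report = top_growth(last, this, n=2)
for delta, key, before, after in report:
    print(f"{'/'.join(key)}: {before / GiB:.0f} GiB -> {after / GiB:.0f} GiB (+{delta / GiB:.0f} GiB)")
```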

Automatic Debug Information Collection

When an alert fires, every second counts. To minimize response time and ensure effective troubleshooting, we’ve implemented an automatic debug information collection pipeline. This pipeline is triggered whenever an alert is raised, instantly gathering essential debug data from the affected Cloud SQL instance.

This automatic process significantly accelerates root cause analysis (RCA) and reduces the mean time to resolution (MTTR) to minutes, rather than the hours or even days it could take to wait for an issue to recur. Our team can focus on diagnosing and resolving the issue instead of manually collecting logs and diagnostic data, and with the relevant information available up front, we can jump straight into incident analysis, ultimately improving the reliability and resilience of our database fleet.

Incorporating this kind of automated data collection not only optimizes our response to incidents but also feeds into a continuous improvement loop. With a more complete dataset on hand, we can better analyze incident patterns, proactively refine alerts, and implement preventive measures to reduce recurrence.
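The collection step described in this section can be sketched as follows, assuming an alert payload that names the affected instance and a `run_query` callable that executes SQL against it (both hypothetical). The diagnostic statements (`SHOW FULL PROCESSLIST`, `SHOW ENGINE INNODB STATUS`, `information_schema.INNODB_TRX`, and `performance_schema.data_locks`, which requires MySQL 8.0) are a plausible starting set rather than the list Rubrik actually collects. Each query is isolated so that one failure does not cost the rest of the snapshot.

```python
import datetime as dt
import json
import pathlib
import tempfile
from typing import Callable, Dict, List, Sequence

# An illustrative set of diagnostics; not necessarily what Rubrik collects.
DIAGNOSTICS: Dict[str, str] = {
    "processlist": "SHOW FULL PROCESSLIST",
    "innodb_status": "SHOW ENGINE INNODB STATUS",
    "innodb_trx": "SELECT * FROM information_schema.INNODB_TRX",
    "data_locks": "SELECT * FROM performance_schema.data_locks",  # MySQL 8.0+
}

RunQuery = Callable[[str, str], List[Sequence]]  # (instance, sql) -> rows


def collect_debug_bundle(alert: dict, run_query: RunQuery, out_dir: pathlib.Path) -> pathlib.Path:
    """Snapshot diagnostics for the alerting instance and write them as one JSON file."""
    now = dt.datetime.now(dt.timezone.utc)
    instance = alert["instance"]
    bundle = {"alert": alert, "collected_at": now.isoformat(), "sections": {}}
    for name, sql in DIAGNOSTICS.items():
        try:
            bundle["sections"][name] = {"sql": sql, "rows": run_query(instance, sql)}
        except Exception as exc:  # record the failure and keep collecting the rest
            bundle["sections"][name] = {"sql": sql, "error": repr(exc)}
    path = out_dir / f"{instance}-{alert['name']}-{now:%Y%m%dT%H%M%SZ}.json"
    path.write_text(json.dumps(bundle, default=str, indent=2))
    return path


# Usage with a fake query runner in place of a real connection.
def fake_run_query(instance: str, sql: str) -> List[Sequence]:
    if "data_locks" in sql:
        raise RuntimeError("performance_schema.data_locks unavailable")
    return [(instance, sql)]


with tempfile.TemporaryDirectory() as tmp:
    path = collect_debug_bundle({"instance": "db-1", "name": "hll_high"}, fake_run_query, pathlib.Path(tmp))
    bundle = json.loads(path.read_text())
print(sorted(bundle["sections"]))
```

In practice the handler would be wired to whatever notification channel the alerting system uses; the fake runner here only demonstrates that a failing query is recorded rather than aborting the bundle.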

Alerts: Proactive Monitoring

In our pursuit of high availability, alerting plays a pivotal role in proactive monitoring and early detection of issues across our database fleet. We've configured a wide range of targeted alerts, each designed to track key performance indicators and operational health metrics. Together, these alerts serve as an early-warning system that helps our teams address potential problems before they escalate.

Some of the critical alerts we’ve established include:

Uptime/Availability

Resource utilization (CPU, memory, storage, and connections)

InnoDB history list length (HLL)

InnoDB row locking

InnoDB redo log usage percentage

MySQL partition management
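To make the list above concrete, here is a sketch of threshold rules with a "sustained for N samples" condition, which avoids paging on momentary spikes. Every metric name, threshold, and sample count below is an illustrative assumption, as are the helper names; the post does not publish its alert definitions, and partition management is left out of this sketch. The HLL query reads the `trx_rseg_history_len` counter from `information_schema.INNODB_METRICS`, and redo log usage is computed as checkpoint age (current LSN minus checkpoint LSN) divided by the configured redo capacity.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class AlertRule:
    metric: str
    threshold: float
    sustained_samples: int  # consecutive samples above threshold required to fire


# Illustrative thresholds only; real values depend on instance size and workload.
RULES: List[AlertRule] = [
    AlertRule("cpu_utilization_pct", 85.0, 5),
    AlertRule("memory_utilization_pct", 90.0, 5),
    AlertRule("disk_utilization_pct", 80.0, 1),
    AlertRule("connections_pct_of_max", 80.0, 3),
    AlertRule("innodb_history_list_length", 1_000_000, 5),
    AlertRule("innodb_row_lock_current_waits", 20, 3),
    AlertRule("redo_log_usage_pct", 75.0, 3),
]

# HLL is exposed as an InnoDB metric counter.
HLL_SQL = "SELECT `COUNT` FROM information_schema.INNODB_METRICS WHERE NAME = 'trx_rseg_history_len'"


def redo_log_usage_pct(current_lsn: int, checkpoint_lsn: int, capacity_bytes: int) -> float:
    """Checkpoint age as a percentage of redo capacity (approximate, since LSNs count redo bytes).

    As it climbs toward capacity, InnoDB is forced into aggressive flushing that stalls writes.
    """
    return 100.0 * (current_lsn - checkpoint_lsn) / capacity_bytes


def firing(series: Dict[str, Sequence[float]], rules: Sequence[AlertRule] = RULES) -> List[str]:
    """Return the metrics whose last `sustained_samples` values all exceed the threshold."""
    fired = []
    for rule in rules:
        recent = list(series.get(rule.metric, []))[-rule.sustained_samples:]
        if len(recent) == rule.sustained_samples and all(v > rule.threshold for v in recent):
            fired.append(rule.metric)
    return fired


# Usage with made-up samples.
series = {
    "cpu_utilization_pct": [70, 88, 90, 91, 92, 95],
    "innodb_history_list_length": [2_000_000, 1_500_000],  # too few samples to be "sustained"
    "redo_log_usage_pct": [redo_log_usage_pct(9_000, 1_000, 10_000)] * 3,
}
print(firing(series))
```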

In addition to these primary alerts, we have several alerts dedicated solely to proactive debug information collection. These special-purpose alerts allow us to gather critical data as soon as anomalies are detected, capturing a snapshot of the database's state during early-stage incidents. Having these alerts in place allows us to streamline future root cause analyses and mitigate issues before they develop into critical failures.

By strategically configuring and fine-tuning these alerts, we maintain a proactive stance on database health, helping us catch and resolve brewing issues before they impact performance or availability.

Weekly Review of Metrics

A key part of our monitoring strategy is the weekly review of top metrics. Each week, our team gathers to examine a curated metrics dashboard that reflects the health and performance of the entire production fleet. This regular review serves multiple purposes:

Fostering Familiarity: By consistently engaging with production metrics, our engineers gain an intimate understanding of normal patterns and behavior across our database instances. This familiarity is crucial for quickly identifying deviations during incidents, ultimately reducing response times.

Boosting Confidence: Regular exposure to real-time data builds our engineers’ confidence in handling issues under pressure. When incidents occur, they can rely on their experience and insights from these reviews to make informed decisions faster.

Detecting Trends and Early Warnings: Weekly reviews often reveal subtle shifts in metrics that may not yet trigger existing alerts. Identifying these trends early allows us to address potential issues proactively, whether by adjusting configurations, tuning queries, or adding new alert conditions to cover gaps in our monitoring strategy.
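As a sketch of the kind of trend a weekly review can surface, the function below compares each metric's weekly median with the previous week and flags large relative moves that are still below the alert threshold, which no existing alert would catch. The 25% cutoff, the use of medians, and the function name are assumptions for illustration.

```python
import statistics
from typing import Dict, List, Sequence


def weekly_drift(last_week: Dict[str, Sequence[float]],
                 this_week: Dict[str, Sequence[float]],
                 alert_thresholds: Dict[str, float],
                 rel_change: float = 0.25) -> List[str]:
    """Flag metrics whose weekly median moved by more than `rel_change` (in either
    direction) while still sitting below their alert threshold."""
    flagged = []
    for metric, samples in this_week.items():
        previous = last_week.get(metric)
        if not previous or not samples:
            continue
        before, after = statistics.median(previous), statistics.median(samples)
        if before == 0:
            continue  # relative change is undefined
        change = (after - before) / before
        if abs(change) > rel_change and after < alert_thresholds.get(metric, float("inf")):
            flagged.append(f"{metric}: median {before:g} -> {after:g} ({change:+.0%})")
    return flagged


# Usage with made-up weekly samples.
last = {"innodb_history_list_length": [40_000, 50_000, 60_000], "cpu_utilization_pct": [40, 42, 41]}
this = {"innodb_history_list_length": [90_000, 100_000, 110_000], "cpu_utilization_pct": [41, 43, 42]}
thresholds = {"innodb_history_list_length": 1_000_000, "cpu_utilization_pct": 85}
for line in weekly_drift(last, this, thresholds):
    print(line)
```

Here the history list length has doubled while remaining far below its alert threshold, which is exactly the kind of signal that might prompt a new alert condition or a closer look at long-running transactions.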

These weekly sessions are more than just a health check—they’re an opportunity to continuously improve our monitoring setup and sharpen our team’s incident response capabilities. By regularly refining our approach based on data and observations, we’re able to stay one step ahead of potential issues and ensure the ongoing reliability of our production fleet.

Consistency of Configuration: The Backbone of Stability

In a multi-tenant environment where customer needs vary widely, configuration consistency is essential for maintaining predictable performance across our fleet of Cloud SQL instances. To ensure it, our team has established standardized policies for every critical server configuration. This approach prevents discrepancies that could lead to unpredictable behavior, costly outages, or performance degradation.

Our guiding principle is "Look at the forest, not just a tree." Rather than making isolated fixes, we evaluate each server parameter in the context of the entire fleet. This holistic mindset allows us to fine-tune configurations for the collective performance of hundreds of databases, proactively eliminating potential issues before they impact any one instance.

For example, each Cloud SQL instance can hold numerous tables per customer, which could quickly exhaust the available table definition cache. By increasing the table definition cache size across the fleet, we avoid that bottleneck without having to manage individual instances. Similarly, we size servers based on anticipated load to strike an optimal balance between cost and performance.
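The sizing logic can be expressed as simple arithmetic: the cache should hold the definitions of every tenant table on the instance plus system tables, with headroom for growth. The tenant counts, the 400-entry allowance for system tables, the 20% headroom, and the function name are hypothetical planning figures. For reference, MySQL's autosized default for `table_definition_cache` is capped at 2,000, and the variable's documented maximum is 524,288.

```python
TABLE_DEFINITION_CACHE_MAX = 524_288  # documented maximum for table_definition_cache


def recommended_table_definition_cache(customers_per_instance: int,
                                       tables_per_customer: int,
                                       system_tables: int = 400,
                                       headroom_pct: int = 20) -> int:
    """Enough cache entries for every tenant table plus system tables, with headroom.

    All inputs are hypothetical planning figures; integer math keeps results exact.
    """
    needed = customers_per_instance * tables_per_customer + system_tables
    return min(TABLE_DEFINITION_CACHE_MAX, needed * (100 + headroom_pct) // 100)


# Usage: 50 tenants x 200 tables each -> 10,400 definitions, 12,480 with 20% headroom,
# far above the autosized default, which is capped at 2,000.
print(recommended_table_definition_cache(50, 200))
```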

Some of the key parameters we’ve standardized include:

Maximum number of connections (max_connections)

InnoDB redo log file size (innodb_log_file_size)

InnoDB purge variables (innodb_max_purge_lag, innodb_max_purge_lag_delay, innodb_purge_batch_size)

MySQL binlog configuration (max_binlog_size)

Online DDL temporary log file size (innodb_online_alter_log_max_size)

Table cache sizes (table_open_cache, table_definition_cache)

By codifying and enforcing these configurations, we achieve uniform performance and reliability across our global deployments, enabling our engineers to manage the fleet efficiently.
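Enforcement can start with a drift check that compares each instance's flags against a fleet-wide baseline. The sketch assumes the flags are fetched from the Cloud SQL Admin API, where an instance's `settings.databaseFlags` is a list of name/value pairs; the baseline values are placeholders (not recommendations, and not Rubrik's settings), and the helper names and instance name are hypothetical.

```python
from typing import Dict, List

# Hypothetical fleet baseline; values are placeholders, not recommendations.
BASELINE_FLAGS: Dict[str, str] = {
    "max_connections": "4000",
    "innodb_log_file_size": str(2 * 1024**3),
    "innodb_max_purge_lag": "1000000",
    "innodb_max_purge_lag_delay": "300000",
    "innodb_purge_batch_size": "1000",
    "max_binlog_size": str(512 * 1024**2),
    "innodb_online_alter_log_max_size": str(4 * 1024**3),
    "table_open_cache": "20000",
    "table_definition_cache": "20000",
}


def flags_from_api(database_flags: List[dict]) -> Dict[str, str]:
    """Turn a settings.databaseFlags list ([{"name": ..., "value": ...}, ...]) into a dict."""
    return {flag["name"]: flag.get("value", "") for flag in database_flags}


def config_drift(instance: str, actual: Dict[str, str],
                 baseline: Dict[str, str] = BASELINE_FLAGS) -> List[str]:
    """List every baseline flag that is missing or differs on this instance."""
    problems = []
    for name, expected in baseline.items():
        got = actual.get(name)
        if got is None:
            problems.append(f"{instance}: {name} unset (expected {expected})")
        elif got != expected:
            problems.append(f"{instance}: {name}={got} (expected {expected})")
    return problems


# Usage: simulate one instance that has drifted from the baseline.
flags = flags_from_api([{"name": k, "value": v} for k, v in BASELINE_FLAGS.items()])
flags["table_definition_cache"] = "2000"
del flags["max_binlog_size"]
drift = config_drift("prod-db-17", flags)
for line in drift:
    print(line)
```

A production check would likely normalize values before comparing (for example, `ON` versus `1`) and treat an unset flag carefully, since it means the MySQL default applies rather than the fleet standard.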

What’s Next?

Our next installment in this series will focus on the design choices and optimizations we made to improve the performance of our Cloud SQL instances. I’ll demonstrate how optimizing queries during development and refining them in production reduces costly fixes, boosts developer productivity, and ensures seamless database performance at scale.

If you don’t want to wait for the next installment, you can always download the series as a single PDF.

One More Thing…Shout Out to the Team!

None of this would be possible without the relentless efforts of our incredible platform teams. Shout out to the following pros!

Platform database: Rajorshi, Travis, Gabriel, Rahul, Yashwanth, Sudip, Anmol, Gurneet, Hardik

SRE: Prabudas, Suraj, Mihir

The expertise and dedication of these team members have been instrumental in scaling Rubrik's database fleet to support our ever-growing ARR. Your commitment to excellence and innovation is what drives us forward. Thank you for being the backbone of our success!