There are few things that are certain in our universe, but encountering a failure of an IT system is certainly one of them. Whether the system itself fails or is hit by an external problem such as a power failure or natural disaster, everyone has experienced or will experience a system failure during their career. As technology has evolved, so have the mechanisms to handle and recover from those failures.

Rubrik is designed to safeguard against these failures to ensure your data is always accessible and recoverable. We talked about our fault-tolerant technology in a previous post on erasure coding. In this blog post, I’ll review a few other key components of Rubrik Cloud Data Management (CDM) and how they address different failure scenarios.

Progression of Resilience

For backup and recovery solutions, there should be a progression of resilience that extends from data storage all the way through the stack to the services managing that data. Since these solutions are often the last line of defense against a disaster such as a ransomware attack, it is paramount to ensure your data is safe and secure. Let’s take a look at how Rubrik CDM does that:

Rubrik CDM was engineered with an immutable file system called Atlas. Being immutable means that once data has been written, it cannot be read, modified, or deleted by clients on your network. To learn more about our immutable architecture, take a look at this blog by Chris Wahl.

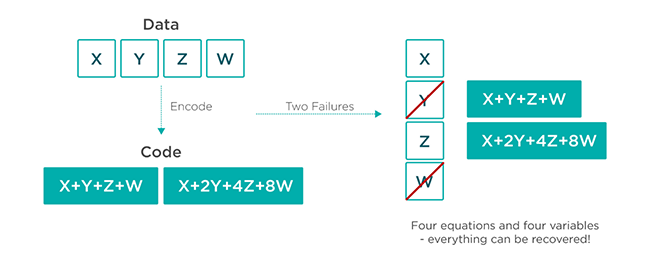

Another important aspect of Atlas is how it writes data to disk and handles failures. This is referred to as erasure coding. Erasure coding is a method of storing redundant data to make it recoverable from storage failures.
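To make the idea of redundant data concrete, here is a minimal sketch of the simplest possible scheme: a single XOR parity block. This is an illustration only, not how Atlas works; it tolerates the loss of just one block, and the block contents and the helper name `xor_blocks` are made up for the example.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR a list of equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three hypothetical data blocks, each imagined to live on a different disk.
data_blocks = [b"back", b"up d", b"ata!"]

# The redundant block: the XOR of all data blocks.
parity = xor_blocks(data_blocks)

# Simulate losing the disk that held the second block.
surviving = [data_blocks[0], data_blocks[2], parity]

# XOR-ing the survivors with the parity block rebuilds the missing block.
recovered = xor_blocks(surviving)
assert recovered == data_blocks[1]
```

Production erasure codes generalize this idea so that more than one lost block can be rebuilt from the surviving ones.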

There are different ways to implement erasure coding, and you can learn more about the Reed-Solomon method that Rubrik uses in our blog. To summarize, this method of erasure coding lets us take the middle ground in the tradeoff between storage overhead (the redundant bits stored for recovery) and availability. This gives customers the best of both worlds: more storage is available for protecting data, which reduces the total cost of ownership, while the system remains tolerant to disk failures.

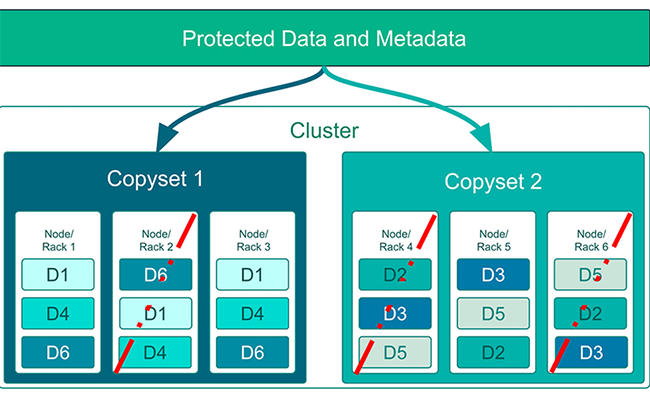
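The overhead side of that tradeoff is simple arithmetic. The sketch below compares three-way mirroring with an erasure-coded layout of 4 data blocks plus 2 redundant blocks. Both survive the loss of any two blocks. The 4+2 layout is a hypothetical choice for illustration; this post does not state the parameters Rubrik uses.

```python
def raw_per_usable(data_blocks: int, redundant_blocks: int) -> float:
    """Raw capacity consumed for every unit of user data stored."""
    return (data_blocks + redundant_blocks) / data_blocks

# Three-way mirroring: 1 original copy plus 2 extra copies.
mirror_3x = raw_per_usable(data_blocks=1, redundant_blocks=2)

# Hypothetical erasure-coded layout: 4 data blocks plus 2 redundant blocks.
ec_4_2 = raw_per_usable(data_blocks=4, redundant_blocks=2)

print(f"3x mirroring: {mirror_3x:.1f}x raw storage per unit of data")
print(f"4+2 erasure coding: {ec_4_2:.1f}x raw storage per unit of data")
```

Under these assumptions, mirroring consumes 3.0x raw capacity and the erasure-coded layout 1.5x for the same two-failure tolerance, which is where the extra usable storage comes from.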

Speaking of hardware failures, what if something more catastrophic happens? Recall that Rubrik CDM is generally deployed in one or more clusters, with each cluster consisting of at least four nodes. Each node is made up of storage, network, and compute resources. When Rubrik CDM ingests data from your environment, Atlas writes the data and metadata using erasure coding across disks, nodes, and clusters. When disks fail, and they eventually will, Atlas and erasure coding allow for data availability and self-healing. Whether one disk fails or multiple disks fail within a node or across a cluster, automatic self-healing mechanisms kick in and intelligently rebuild data sets on available disks. As a result, even before failed disks are replaced, the cluster is tolerant to additional failures, provided sufficient capacity exists. When the failed disks are replaced, that capacity is automatically added back into the system and data is rebalanced.

Large, distributed systems face the problem that the probability of multiple, simultaneous node failures actually increases as the number of nodes grows. (For a great explanation and details with the math, check out The probability of data loss in large clusters by Martin Kleppmann.) One way to reduce the probability of such failures is to reduce the self-heal time. Rubrik CDM can typically self-heal in less than an hour. As a second method, Rubrik CDM 5.1 introduced an architectural enhancement called Copysets, which are collections of metadata that are intelligently distributed across nodes. The use of Copysets results in about a 50x reduction in the risk of data loss (roughly 1 in 1 billion) when two nodes are lost at the same time in a large deployment, compared with clusters without Copysets.
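A rough back-of-the-envelope model shows both effects. The sketch below assumes that each node fails independently with the same small probability during one self-heal window. The function name `p_two_or_more` and the probabilities are hypothetical, chosen only to show the trend; it does not model Copysets or any real Rubrik cluster.

```python
def p_two_or_more(nodes: int, p_node: float) -> float:
    """Probability that at least two nodes fail in the same window,
    assuming independent failures with probability p_node per node."""
    p_none = (1 - p_node) ** nodes
    p_exactly_one = nodes * p_node * (1 - p_node) ** (nodes - 1)
    return 1 - p_none - p_exactly_one

# Hypothetical per-node failure probability during one self-heal window.
P_NODE = 0.001

# More nodes means a higher chance of overlapping failures.
for nodes in (4, 20, 100):
    print(f"{nodes:>3} nodes: {p_two_or_more(nodes, P_NODE):.2e}")

# A shorter self-heal window lowers the per-node probability, modeled here
# as a 10x smaller value, and the overlap risk falls with it.
print(f"100 nodes, shorter window: {p_two_or_more(100, P_NODE / 10):.2e}")
```

In this simple model the chance of two overlapping failures grows roughly with the square of the node count, which is why shortening the heal window matters more as clusters get larger.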

Continuing through the progression of resilience, we come to the system that orchestrates many of the mechanisms discussed above. Rubrik CDM is powered by a component called Cerebro, a distributed, intelligent system that controls scheduling and data movement across the cluster. Data ingestion, lifecycle, task scheduling, and metadata composition are all controlled by Cerebro, so it is vital that it be as available and resilient as possible.

For catastrophic failures, such as the loss of an entire data center, it is critical to have data replicated to different geographical locations. It is also crucial to have the intelligence and orchestration built in to allow for an efficient return to operations. Cerebro powers data replication to secondary locations such as a DR site or the public cloud. With Rubrik’s robust SLA Domains, Cerebro is the secret sauce that makes sure data is where it needs to be, when it needs to be there, and with enough resilience to meet its assigned policy.

Conclusion

Rubrik CDM has several key components that help customers address failures. Our immutable file system, Atlas, paired with Cerebro’s intelligence, drastically reduces the risk of data or service loss when failures occur. And while distributed systems can be built to address many of these same challenges, Rubrik CDM provides a progression of resilience that reduces risk, self-heals, and allows administrators to be confident that their last line of defense in a disaster will be ready when the time comes.